Pew Research Center’s international surveys have typically not given respondents the explicit option to say that they don’t know the answer to a particular question. In surveys conducted face-to-face or by phone, we’ve instead allowed respondents to voluntarily skip questions as they see fit. Similarly, in self-administered surveys conducted online, respondents can skip questions by selecting “Next page” and moving on. But the results of many survey experiments show that people are much less likely to skip questions online than when speaking to interviewers in person or on the phone.

This skipping effect raises a question for cross-national surveys that use different modes of interviewing people: Would it be better to give respondents an explicit “Don’t know” option in online surveys for potentially low-salience questions even if the same is not done in face-to-face or phone surveys?

To explore this question, we conducted multiple split-form experiments on nationally representative online survey panels in the United States and Australia.¹ In both countries, we asked people about international political leaders and gave half of the sample the option to choose “Never heard of this person” while withholding this option from the other half of respondents.

Similarly, we asked questions in Australia assessing various elements of Chinese soft power such as the country’s universities, military, standard of living, technological achievements and entertainment. We offered “Not sure” as a response option to half the sample, while the other half was not shown this option.
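As a rough illustration of the split-form design described above, the sketch below randomly assigns half of a sample to a form that shows the “Don’t know” option and half to a form that does not. The function name, the form labels, the seed and the sample size of 1,000 are all hypothetical; the article does not describe how the panels actually performed the randomization.

```python
import random


def assign_split_form(respondent_ids, seed=0):
    """Randomly assign half of the respondents to each form.

    Hypothetical helper for illustration only; not the panels' actual procedure.
    """
    ids = list(respondent_ids)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    shuffled = ids[:]
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    shown_option = set(shuffled[:half])  # these respondents see "Don't know"
    return {
        rid: "with_dont_know" if rid in shown_option else "without_dont_know"
        for rid in ids
    }


# Assumed sample of 1,000 respondent IDs
assignment = assign_split_form(range(1000))
n_with = sum(form == "with_dont_know" for form in assignment.values())
n_without = len(assignment) - n_with
print(n_with, n_without)
```

Because assignment is random, differences in the answers between the two halves can be attributed to the presence of the option rather than to differences between the respondents.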

In the sections that follow, we’ll evaluate the impact of the “Never heard of this person” and “Not sure” options (which we’ll jointly refer to as “Don’t know” options) and assess whether adding such options can improve comparability across survey modes.

How does adding a “Don’t know” option affect views of world leaders?

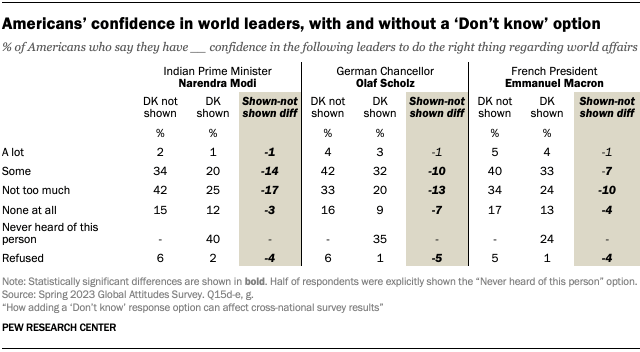

In both the U.S. and Australia, giving respondents the option to say they had never heard of certain world leaders resulted in more of them choosing that option. Specifically, we tend to see a shift of topline results from the more moderate response options – “Some confidence” and “Not too much confidence” – to the “Don’t know” option. This was especially the case for leaders who received the largest shares of “Don’t know” responses such as French President Emmanuel Macron, German Chancellor Olaf Scholz and Indian Prime Minister Narendra Modi.

For example, in the U.S., when respondents were asked about their confidence in Modi without a “Don’t know” option, 34% said they had some confidence in the Indian leader and 42% said they had not too much confidence in him. But when we offered respondents the option to say they had never heard of Modi, the share saying they had some confidence in him dropped 14 percentage points and the share saying they had not too much confidence in him dropped 17 points. On the other hand, the shares of respondents who chose either of the two more forceful response options – that they had a lot of confidence in Modi or no confidence at all in him – were comparable whether the “Don’t know” option was presented or not.
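The Modi figures can be worked through as simple percentage-point arithmetic. The sketch below uses only the numbers quoted above; the variable names are illustrative. It derives the implied shares in the condition with the option, and it does not attempt to compute the “Never heard of this person” share itself, which is not reported here.

```python
# Shares (%) in the condition WITHOUT a "Don't know" option, from the text
without_dk = {"Some confidence": 34, "Not too much confidence": 42}

# Reported drops (percentage points) once the option was offered
drops = {"Some confidence": 14, "Not too much confidence": 17}

# Implied shares in the condition WITH the option
with_dk = {answer: without_dk[answer] - drops[answer] for answer in without_dk}

# Combined movement away from the two moderate categories
total_shift = sum(drops.values())

print(with_dk)      # {'Some confidence': 20, 'Not too much confidence': 25}
print(total_shift)  # 31
```

In other words, the two moderate categories together lost 31 percentage points when the option was offered.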

While Australians were generally less likely than Americans to say they had never heard of the leaders in question, the response patterns were broadly similar between the two countries. For these lesser-known leaders, the share of respondents who simply refused to answer the question also decreased when respondents were able to say they did not know the leader.

The experiment in the U.S. and Australia allowed us to observe other patterns. For example, in both countries, the groups most likely to choose the “Don’t know” answer option included women, people without a college degree and the youngest respondents.

How does adding a “Don’t know” option affect views of China’s soft power?

To assess how a “Don’t know” option might affect a different type of question, we ran the same experiment on a battery of questions in Australia that asked respondents to rate China’s universities, standard of living, military, technological achievements and entertainment (including movies, music and television). Respondents could rate each of these aspects of Chinese soft power as the best, above average, average, below average or the worst compared with other wealthy nations.

Here, too, adding a “Don’t know” option resulted in a shift away from the middle answer options and toward the “Don’t know” option. For example, when asked about Chinese universities without the “Don’t know” option, 47% of Australians rated them as average. But when we included the “Don’t know” option, the share of Australians saying this dropped to 33%. Similarly, the share of Australians who rated Chinese entertainment as average fell from 40% without the “Don’t know” option to 27% with it. There were few notable differences in the other response categories.

How does adding a “Don’t know” option change how we interpret cross-national findings?

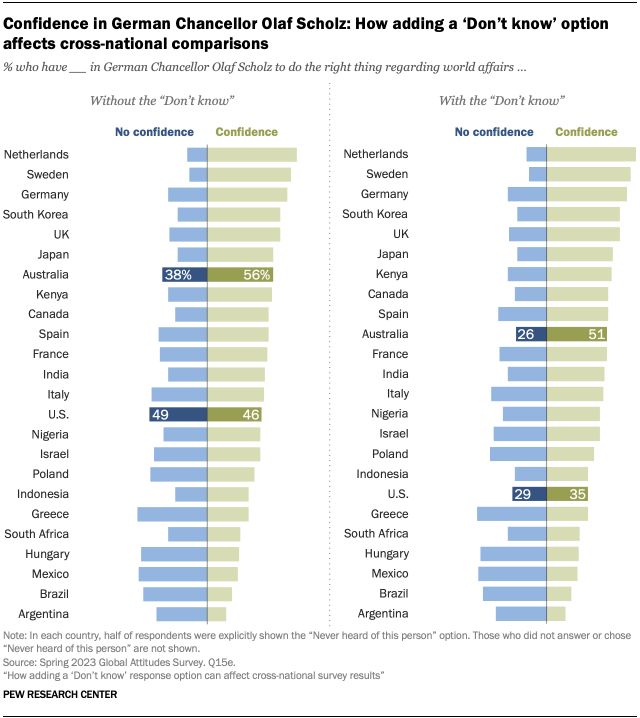

We almost always analyze our international survey data in the context of how countries compare with one another. For example, we’d like to know if people in a certain country are the most or least likely to have confidence in a given leader. However, as shown above, including an explicit “Don’t know” option can meaningfully change our results and make it challenging to compare countries against one another in this way.

For the questions used in this experiment, adding a “Don’t know” option generally did not result in major shifts in terms of how countries compare with one another. The main exception was Americans’ confidence in Germany’s leader, Scholz.

Yet even in his case, the inclusion of the “Don’t know” option did not substantially change the major conclusions we might draw from cross-national comparisons: Americans’ confidence in Scholz is relatively low, especially compared with the broad confidence he inspires in the Netherlands, Sweden and Germany itself.

We see a few potential reasons for this limited effect on the overall pattern of results. For one, there is a wide range of opinions across the countries surveyed: The share expressing confidence in Scholz ranges from 76% in the Netherlands to just 16% in Argentina. The 11-point difference between U.S. responses with and without a “Don’t know” option is therefore relatively small compared with the overall range across the other 23 countries surveyed.
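To put the comparison above in numbers, the sketch below sets the 11-point U.S. gap against the spread between the highest and lowest country figures cited. The variable names are illustrative, and the ratio is only a rough gauge of scale, not a statistic reported in the analysis.

```python
highest_share = 76  # % with confidence in Scholz, Netherlands
lowest_share = 16   # % with confidence in Scholz, Argentina
us_gap = 11         # point difference between the two U.S. conditions

cross_national_range = highest_share - lowest_share
gap_as_share_of_range = us_gap / cross_national_range

print(f"Range: {cross_national_range} points")             # Range: 60 points
print(f"U.S. gap / range: {gap_as_share_of_range:.0%}")    # U.S. gap / range: 18%
```

The U.S. gap amounts to under a fifth of the 60-point spread across countries, which is why it does little to change where the U.S. falls relative to the others.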

Also, including the “Don’t know” response did not seem to affect just positive or negative responses. Instead, there was similar attrition from the “Some confidence” and “Not too much confidence” categories into the “Don’t know” category.

Conclusion

We believe including an explicit “Don’t know” option allows respondents to reflect their opinions more precisely in online surveys. While there may be significant effects on our topline survey results, our experiment does not indicate that adding a “Don’t know” option would greatly change the conclusions we can draw from cross-national analysis. Nor does it show that demographic groups treated question formats differently. Therefore, we’re inclined to offer a “Don’t know” option on future online surveys that are part of our cross-national survey research efforts.

While previous research shows that respondents are more likely to refuse to answer questions in face-to-face and phone surveys than in online surveys, we do see potential for further inquiry into how phone and face-to-face respondents would treat an explicit “Don’t know” option on low-salience questions.

¹ While the U.S. panel is fully online, the Australia panel is mixed-mode, and a small percentage (3%) of respondents elected to take the survey over the phone. They too were presented with an explicit “Don’t know” option in one condition and their responses are included in this analysis.