At Pew Research Center, we routinely ask the people who take our surveys to give us feedback about their experience. Were the survey questions clear? Were they engaging? Were they politically neutral?

While we get a wide range of feedback on our surveys, we were surprised by a comment we received on an online survey in 2024: “You misspelled YES with FORKS numerous times.”

That comment was soon followed by several others along the same lines:

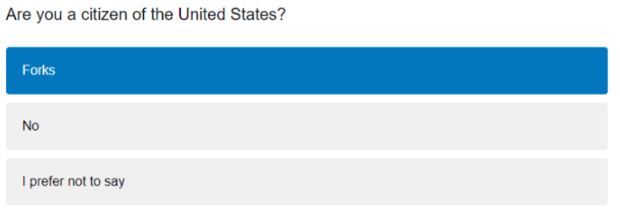

- “Please review [the] answer choices. Every ‘yes’ answer for me was listed as ‘forks’ for some reason. I.e. instead of yes/no it was forks/no.”

- “My computer had some difficulty with your answer choices. For example, instead of ‘yes’ for yes or no answers, my display showed ‘forks.’ Weird.”

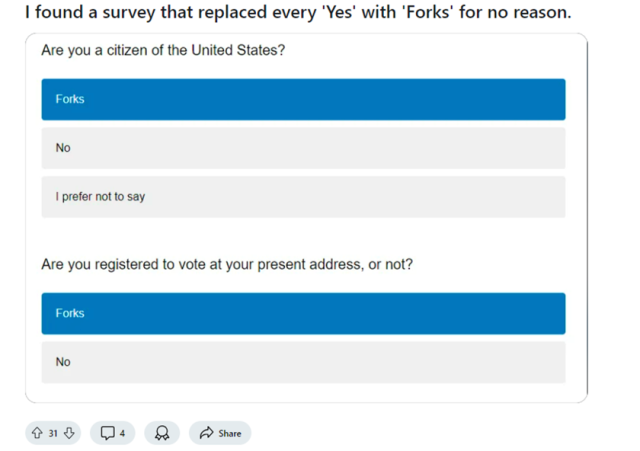

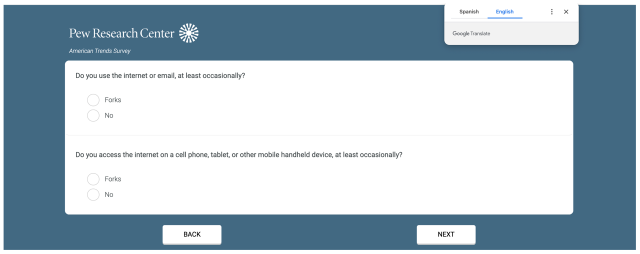

Confused by these comments, we decided to investigate, and we discovered a real problem in online surveys. By early 2023 at the latest, a bizarre and alarming technical glitch – and yes, a hilarious one – had started popping up in some organizations’ online surveys and forms, and it later appeared in our 2024 survey. A few Reddit users shared screenshots from a variety of surveys in which questions that should have offered the answer options “yes” and “no” instead offered “forks” and “no.”

While the effects on our own survey were (fortunately) minor, we found that the problem had the potential to be more widespread than just the word “yes” changed to “forks.”

In the rest of this post, we’ll describe the bug in more detail, explain how we tracked it down and fixed it, and show how we ensured that the data we collected in our 2024 survey is still reliable.

What caused the error

We discovered two interconnected problems that caused the “forks” error.

First, something in the underlying programming for our 2024 online poll caused web browsers to think that the survey webpage might be in Spanish, even though it was in English. The culprit was a “lightbox popup,” a design feature that displays ads – or, in our case, additional survey instructions – in a window over the page when a respondent clicks a link. Some browsers read the lightbox popup as containing a different language, which triggered their built-in auto-translation feature.

For some respondents, this led the browser to conclude that our survey was written in a language other than English and to ask whether they wanted the page translated into English or, we think, to translate it into English automatically.

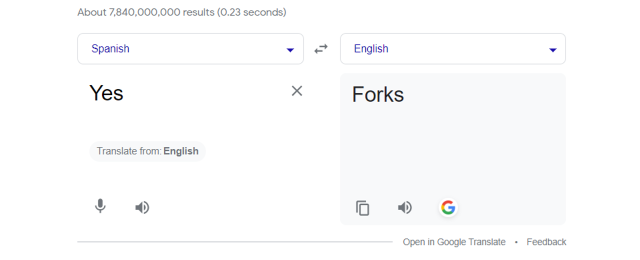

The second problem we discovered is that Google Translate contains a bizarre error. If you tell it that “yes” is a Spanish word, and then ask it to translate “yes” to English, the translation you receive is “forks.”

These two issues combined so that, in some instances, Google Chrome automatically attempted to translate our survey webpage from “Spanish” into English. Since the survey was already in English, this mostly did nothing, with the notable exception of the “yes” to “forks” translation. But there were some other small changes to the survey, too.

The translation also changed the question “As of today do you lean more to the Republican Party or the Democratic Party?” to “As of today do you read more to the Republican Party or the Democratic Party?” (“Lean” is a conjugation of the Spanish verb leer – to read.)

Other changes were subtler, such as capitalization errors we noticed when replicating the error. We did not see any feedback from survey-takers that mentioned any of these other issues.

How we solved the mystery

After receiving our first piece of feedback about the “forks” error in our 2024 survey, our vendor – the company that programs our surveys and handles our interactions with respondents – immediately checked the survey’s programming and confirmed that the word “forks” did not appear anywhere in the code.

We had also tested the survey extensively before sending it to respondents. Several of our staffers repeatedly went through it as if they were respondents, looking for typos and for errors in the logic and randomization of the questions. They checked it on different devices and in different web browsers to make sure everything displayed as it should. None of these testers ever saw the word “forks” in the survey.

When we received additional comments from our respondents about the “forks” issue, we became concerned and entered problem-solving mode. We took the following steps:

- Our vendor double- and triple-checked the programming and confirmed without a doubt that the word “forks” did not appear anywhere in it.

- We searched the internet to see whether anyone else had reported this issue. In fact, someone had: We found a couple of threads on Reddit posted by people who had taken other organizations’ surveys and observed the same phenomenon.

- An employee of our vendor asked if anyone else at their company had ever observed the “forks” issue or heard reports from survey-takers. No one had.

- At this point, we felt our only option was to replicate the error. Our best guess was that some kind of user setting was interacting strangely with the webpage. After many attempts, a member of our team finally saw “forks” instead of “yes” while testing the survey.
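
The vendor’s first check, confirming that “forks” never appears in the survey’s source, amounts to a recursive, case-insensitive text search. The sketch below assumes the survey’s programming can be exported as text files into a local directory; `find_string_in_files` is a hypothetical helper, not the vendor’s tool. Note what an empty result does and does not prove: it rules out the source files, but it cannot see text that a browser rewrites after the page loads, which is why replicating the error in a live browser was the necessary next step.

```python
from pathlib import Path


def find_string_in_files(root: str, needle: str) -> list[tuple[str, int, str]]:
    """Return (path, line number, stripped line) for every line of every
    readable text file under `root` that contains `needle`, ignoring case."""
    target = needle.lower()
    hits: list[tuple[str, int, str]] = []
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file():
            continue
        try:
            text = path.read_text(encoding="utf-8")
        except (UnicodeDecodeError, OSError):
            continue  # skip binary or unreadable files
        for lineno, line in enumerate(text.splitlines(), start=1):
            if target in line.lower():
                hits.append((str(path), lineno, line.strip()))
    return hits
```

Called as `find_string_in_files("survey_export", "forks")` (the directory name is hypothetical), a clean survey returns an empty list.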

Replicating the error gave us some clues about what could be causing it:

- Our staffer was using Google Chrome when she saw the error, but she (and others) had tested the survey in Chrome many times before without seeing “forks.”

- There were a few other oddities in how the survey displayed in that particular test session, such as a lack of capitalization at the beginning of some sentences.

- When our staffer exited the survey and rejoined it, even in the same browser, the issues disappeared.

- We had gone through the survey dozens or maybe even hundreds of times before seeing the error, so we concluded that the issue must be rare.

With a little more experimentation, we identified translation as the root of the issue.

How we fixed the error

Once we discovered what was causing the bug, our vendor immediately disabled the browser’s translation function by adding HTML code to the survey wrapper. If survey-takers tried to use their browser’s pop-up translation feature to translate the page, nothing would happen. (As in many of our surveys, participants selected their preferred language at the beginning. Those who selected Spanish were directed to a Spanish version, which we developed with the help of professional translators. We only observed the “forks” glitch in the English version of the survey.)
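
The post doesn’t say exactly which HTML the vendor added. As a sketch under that uncertainty, here is a wrapper that uses two widely supported opt-outs: the standard HTML `translate="no"` attribute on the `<html>` element and the `<meta name="google" content="notranslate">` tag that Google Translate in Chrome honors. It also declares the page language explicitly. `render_survey_page` and its parameters are hypothetical names for illustration.

```python
from html import escape


def render_survey_page(title: str, body_html: str, lang: str = "en") -> str:
    """Wrap survey content in a page that declares its language and opts
    out of browser machine translation.

    Assumption: the vendor's real wrapper is not public. `body_html` is
    trusted survey markup and is inserted as-is; `title` and `lang` are escaped.
    """
    return (
        "<!DOCTYPE html>\n"
        # translate="no" is the HTML standard's opt-out from machine translation
        f'<html lang="{escape(lang)}" translate="no">\n'
        "<head>\n"
        '  <meta charset="utf-8">\n'
        # Google-specific opt-out recognized by Chrome's built-in translator
        '  <meta name="google" content="notranslate">\n'
        f"  <title>{escape(title)}</title>\n"
        "</head>\n"
        f"<body>\n{body_html}\n</body>\n"
        "</html>\n"
    )
```

The Spanish version of a survey would be rendered the same way with `lang="es"`, so each version declares its own language and neither is machine-translated.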

We also discussed whether a more extensive fix could keep the browser’s translation pop-up from appearing at all, but this was not possible. However, the vendor came up with an additional preventive measure: As a standard part of our survey programming, it now matches the lightbox scripting to the language of the text on the webpage, to prevent the browser from mistakenly detecting a different language and triggering the auto-translate pop-up. The pop-up may still appear on occasion, but the HTML in the survey wrapper prevents it from changing the content of the webpage.
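
A pre-launch check in the spirit of this measure could flag any element whose declared language differs from the page’s. This is a minimal sketch using Python’s standard `html.parser`, assuming the mismatch is visible as a `lang` attribute on the lightbox markup. The post doesn’t describe what in the lightbox code misled browsers, and browsers also infer language from the text itself, so a clean result here is a partial check, not proof. `LangAudit` and `lang_mismatches` are hypothetical names.

```python
from html.parser import HTMLParser
from typing import Optional


class LangAudit(HTMLParser):
    """Collect elements whose lang attribute disagrees with <html lang>."""

    def __init__(self) -> None:
        super().__init__()
        self.page_lang: Optional[str] = None
        self.mismatches: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        lang = dict(attrs).get("lang")
        if not lang:
            return  # no (or empty) lang attribute on this element
        primary = lang.split("-")[0].lower()  # treat "en-US" and "en" as the same language
        if tag == "html":
            self.page_lang = primary
        elif self.page_lang is not None and primary != self.page_lang:
            self.mismatches.append((tag, lang))


def lang_mismatches(page_html: str) -> list[tuple[str, str]]:
    """Return (tag, lang) pairs for elements declared in a different language than the page."""
    audit = LangAudit()
    audit.feed(page_html)
    audit.close()
    return audit.mismatches
```

Run against an English page whose lightbox carries `lang="es"`, this returns `[("div", "es")]` for a `div`-based lightbox; a matching `lang="en-US"` produces no mismatch.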

How we ensured the quality of our data

Anytime something goes wrong while a survey is in the field – as it did in this instance – we need to make sure the issue didn’t skew our data. Here are the steps we took in this case:

- We assessed the scale of the glitch. We wanted to know how many respondents saw it, and from what we could tell, it was a tiny fraction: Only 0.2% of respondents mentioned the glitch in their feedback. Of course, not everyone who saw it may have mentioned it, and some potential survey-takers may have simply decided not to complete the survey when they saw the error.

- Still, because we went through the survey dozens or hundreds of times before we replicated the issue, we had reason to believe that only a tiny minority of respondents saw it. Also, every respondent who reported the issue in their feedback specifically told us that “forks” was replacing “yes,” meaning they clearly understood the intent and meaning of the response option despite the error.

- Our next step was to see if there were any unusual response patterns in the data. Every yes/no question in the survey had also been asked on a similar survey the year before. We compared the distribution of responses on each of the questions affected (looking only at those who responded to the survey online in each year) and found that the 2024 responses looked very similar to the 2023 responses. This further reassured us that the problem was confined to a very small share of survey-takers, that the error didn’t affect how people answered the questions, or both.

- We also checked the breakoff rate to see whether the bug was causing respondents to start the survey and then quit before finishing. Only 53 people broke off before completing the survey (2% of those who logged in to take it online). By comparison, 89 people (4%) broke off in the previous year’s survey, which did not have the “forks” error.
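
The post doesn’t say which statistical test, if any, was used for these year-over-year comparisons. One simple way to ask whether a difference in a share (the share answering “yes” to a question, or the share breaking off) is larger than chance would suggest is a pooled two-proportion z-test, sketched below. `two_proportion_z_test` is a hypothetical helper, and it treats both years as simple random samples, ignoring survey weights and design effects.

```python
import math


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Pooled two-sided z-test for the difference between x1/n1 and x2/n2.

    Returns (z, p_value). Treats both samples as simple random samples,
    so it ignores survey weights and design effects.
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        return 0.0, 1.0  # everyone in both groups gave the same answer: no difference
    z = (p1 - p2) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value
```

For breakoff, `x1` and `x2` would be the people who broke off (53 in 2024, 89 in 2023) and `n1` and `n2` the people who logged in online each year; those denominators aren’t given here beyond the rounded percentages, so they would come from the survey files. Questions with more than two answer categories would call for a chi-square test instead.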

Given these findings, we saw no evidence that this bizarre error affected our survey data in any meaningful way, so we felt comfortable analyzing and releasing the data.

We hope this explanation will be helpful to you if you’ve encountered a similar issue, and we’re grateful to our survey respondents for alerting us to the error so it could be fixed.