Artificial intelligence (AI) is spreading through society into some of the most important sectors of people’s lives – from health care and legal services to agriculture and transportation.1 As Americans watch this proliferation, they are worried in some ways and excited in others.

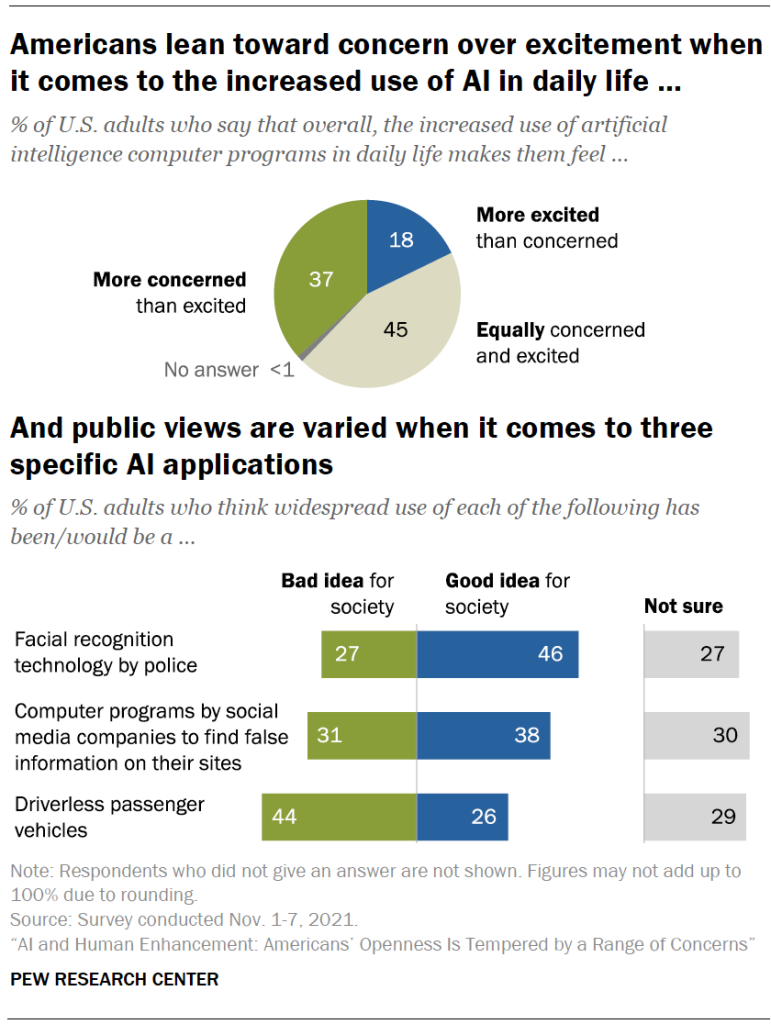

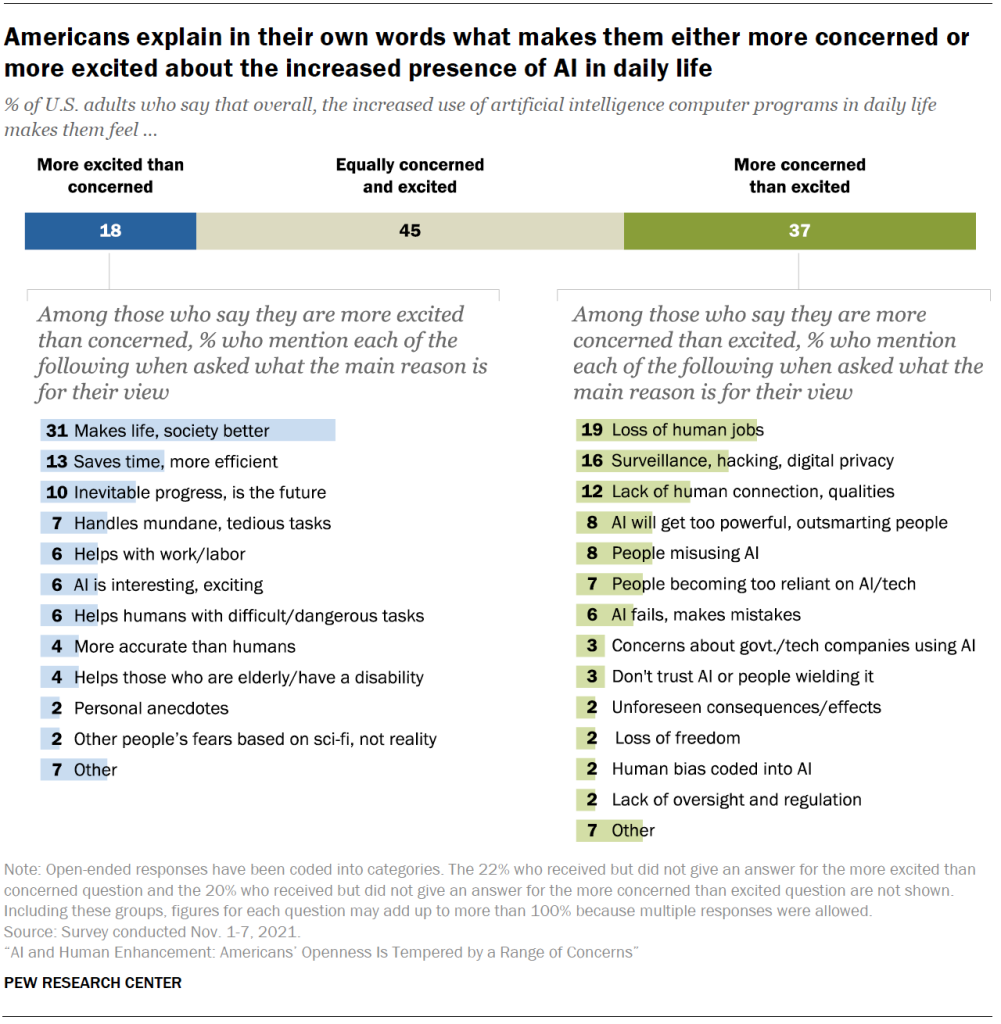

In broad strokes, a larger share of Americans say they are “more concerned than excited” by the increased use of AI in daily life (37%) than say the opposite (18%), while nearly half of U.S. adults (45%) say they are equally concerned and excited. Asked to explain in their own words what concerns them most about AI, some of those who are more concerned than excited cite potential job losses, privacy considerations and the prospect that AI might surpass human skills; others say it will lead to a loss of human connection, be misused or be relied on too much.

But others are “more excited than concerned,” and they mention such things as the societal improvements they hope will emerge, the time savings and efficiencies AI can bring to daily life and the ways in which AI systems might be helpful and safer at work. And people have mixed views on whether three specific AI applications are good or bad for society at large.

This chapter covers the general findings of the survey related to AI programs. It also highlights public attitudes about three AI-related applications, each of which is explored in depth in the three chapters that follow. Some key findings:

How Pew Research Center approached this topic

The Center survey asked respondents a series of questions about three applications of artificial intelligence (AI):

- Facial recognition technology that could be used by police to look for people who may have committed a crime or to monitor crowds in public spaces.

- Computer programs, called algorithms, used by social media companies to find false information about important topics that appears on their sites.

- Driverless passenger vehicles that are equipped with software allowing them to operate with computer assistance and are expected to be able to operate entirely on their own without a human driver in the future.

Other questions asked respondents about their feelings regarding AI’s increased use, the way AI programs are designed and a range of other possible AI applications.

This study builds on prior Center research including surveys on Americans’ views about automation in everyday life, the role of algorithms in parts of society and the use of facial recognition technology. It also draws on insights from several canvassings of experts about the future of AI and humans.

Use of facial recognition by police: We chose to explore the use of facial recognition by police because police reform has been a major topic of debate, especially in the wake of the killing of George Floyd in May 2020 and the ensuing protests. The survey shows that a plurality of U.S. adults (46%) thinks use of this technology by police is a good idea for society, while 27% believe it is a bad idea and 27% say they are not sure. At the same time, 57% think crime would stay about the same if police use of facial recognition became widespread, while 33% think crime would decrease and 8% think it would rise.

Moreover, views are divided about how the widespread use of facial recognition technology would affect the fairness of policing. Majorities believe widespread police use of this technology would definitely or probably result in more missing persons being found by police and crimes being solved more quickly and efficiently. Still, about two-thirds also think police would be able to track everyone’s location at all times and that police would monitor Black and Hispanic neighborhoods much more often than other neighborhoods.

Use of computer programs by social media companies to find false information on their sites: We chose to study attitudes about the use of computer programs (algorithms) by social media companies because social media is used by a majority of U.S. adults. There are also concerns about the impact of made-up information and how efforts to target misinformation might affect freedom of information. The survey finds that 38% of U.S. adults think the widespread use of computer programs by social media companies to find false information on their sites is a good idea for society, compared with 31% who say it is a bad idea and 30% who say they are not sure.

When asked about specific possible impacts, Americans express largely negative views. Majorities believe widespread use of algorithms by social media companies to find false information is definitely or probably causing political views to be censored and news and information to be wrongly removed from the sites. And majorities do not think these algorithms are producing beneficial outcomes, such as making it easier to find trustworthy information or allowing people to have more meaningful conversations. There are substantial partisan differences on these questions, with Republicans and those who lean toward the GOP holding more negative views than Democrats and Democratic leaners.

Driverless passenger vehicles: We chose to study public views about driverless passenger vehicles because they are being tested on roads now and their rollout on a larger scale is being debated. The survey finds that a plurality of Americans (44%) believe the widespread use of driverless passenger vehicles would be a bad idea for society. That compares with 26% who think this would be a good idea. Some 29% say they are not sure. A majority say they definitely or probably would not want to ride in a driverless car if they had the opportunity. Some 39% believe widespread use of driverless cars would decrease the number of people killed or injured in traffic accidents, while 31% think there would not be much difference and 27% think there would be an increase in these types of deaths or injuries.

People envision a mix of positive and negative outcomes from widespread use of driverless cars. Majorities believe older adults and those with disabilities would be able to live more independently and that getting from place to place would be less stressful. At the same time, majorities also think many people who make their living by driving others or delivering things with passenger vehicles would lose their jobs and that the computer systems in driverless passenger vehicles would be easily hacked in ways that put safety at risk.

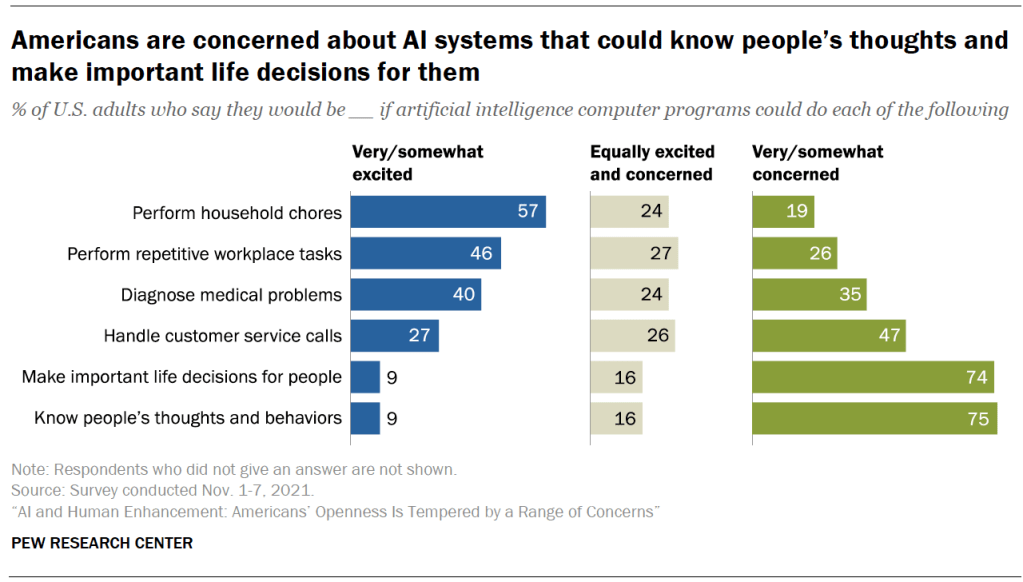

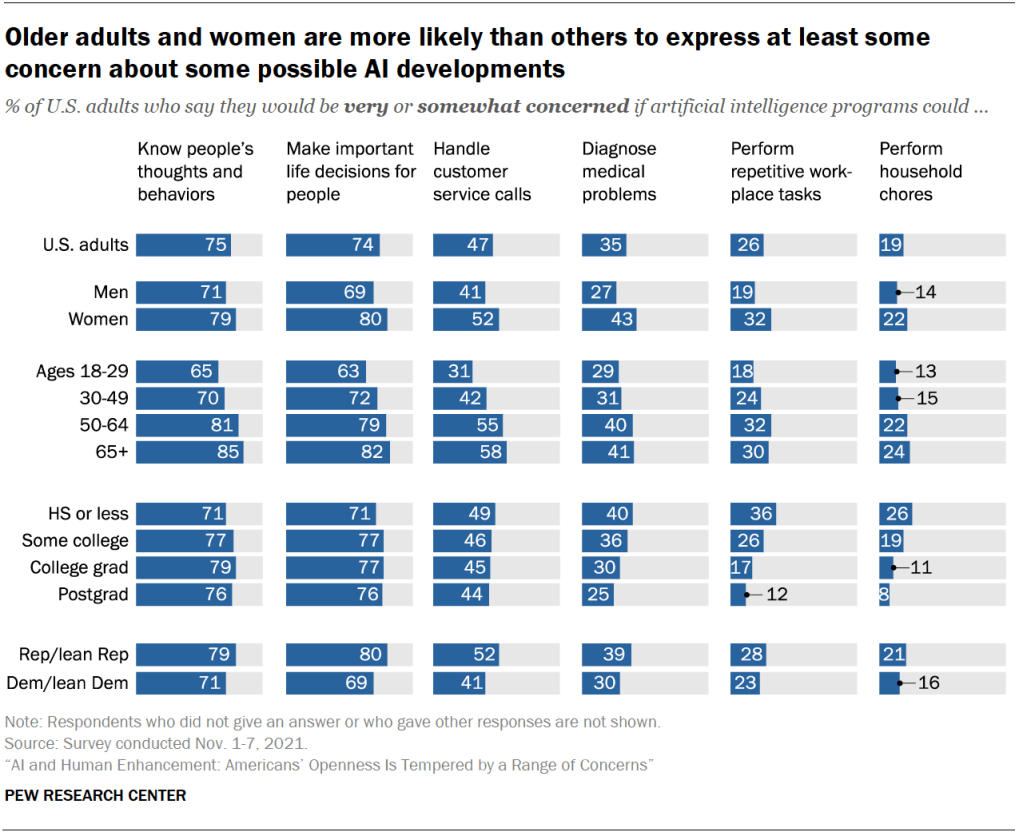

In their responses to survey questions about other possible developments in artificial intelligence, majorities express concern about the prospect that AI could know people’s thoughts and behaviors and make important life decisions for people. And when it comes to the use of AI for decision-making in a variety of fields, the public is more opposed than not to the use of computer programs (algorithms) to make final decisions about which patients should get a medical treatment, which people are good candidates for parole, which job applicants should move on to the next round of interviews or which people should be approved for mortgages.

Still, some possible AI applications have public appeal. For example, more Americans are excited than concerned about AI applications that can do household chores, and the same is true of AI apps that can perform repetitive workplace tasks.

There are patterns in views of three AI applications, but other opinions are unique to particular AI systems

The chapters following this one cover in detail people’s views of three major applications of AI, including demographic differences and other patterns that emerge.

Americans are split in their views about the use of facial recognition by police, and these views differ across groups. For example, while majorities across racial and ethnic groups say police would use facial recognition to monitor Black and Hispanic neighborhoods much more often than other neighborhoods if the technology became widespread, Black and Hispanic adults are more likely than White adults to say this. When it comes to the way social media companies use algorithms to identify false information, there are clear partisan differences in how the public assesses these computer programs. And people believe that a mix of both positive and negative outcomes would occur if driverless cars became widely used.

When it comes to public awareness of these AI applications, majorities have heard at least a little about each of them, but some Americans have not heard about them at all. Awareness can also relate to views of these applications. For instance, those who have heard a lot about driverless passenger vehicles are more likely than those who have heard nothing about such cars to believe they are a good idea for society. By contrast, those who have heard a lot about police use of facial recognition are more likely than those who have heard nothing about it to say it is a bad idea for society. Among those who have heard a lot about social media companies using algorithms to detect false information on their sites, views of whether this practice is good or bad for society lean negative, while over half of those who have heard nothing about it are not sure how they feel.

Beyond awareness, Americans’ views about these AI applications also show patterns related to education: those with more formal education often hold different views from those with less. For example, those with a postgraduate degree are more likely than those with a high school education or less to think the widespread use of algorithms by social media companies to root out false information on their platforms and the widespread use of driverless vehicles are good ideas for society. The reverse is true for facial recognition: those with a postgraduate degree are more likely than those with a high school diploma or less to think its widespread use by police is a bad idea for society.

Additionally, the views of young and older adults sometimes diverge on these three AI applications. For instance, adults ages 18 to 29 are more likely than those 65 and older to say the widespread use of facial recognition by police is a bad idea for society. At the same time, these young adults are more likely than those 65 and older to think the widespread use of self-driving cars is a good idea for society.

The next sections of this chapter cover the findings from the survey’s general questions about AI.

Americans more likely to be ‘more concerned than excited’ about increased use of AI in daily life than vice versa

In this survey, artificial intelligence computer programs were described as those designed to learn tasks that humans typically do, such as recognizing speech or pictures. Of course, an array of AI applications are being implemented in everything from game-playing to food growing to disease outbreak detection. Synthesis efforts now regularly chart the spread of AI.

As these developments unfold, a larger share of Americans say they are “more concerned than excited” about the increased use of AI in everyday life than say they are “more excited than concerned” about these prospects (37% vs. 18%). And nearly half (45%) say they are equally excited and concerned.

There are some differences by educational attainment and political affiliation. For instance, a larger share of those who have some college experience or a high school education or less say they are more concerned than excited, compared with their counterparts who have a bachelor’s or advanced degree (40% vs. 32%). Republicans are more likely than Democrats to say they are more concerned than excited (45% vs. 31%). Full details about the views of different groups on this question can be found in the Appendix.

When those who say they are more excited than concerned about the increased use of AI in daily life are asked to explain in their own words the main reason they feel that way, 31% say they believe AI can make key aspects of our lives and society better.

As one man explained in his written comments:

“AI, if used to its fullest ‘best’ potential, could help to solve an unbelievable number of major problems in the world and help solve massive crises like world hunger, pollution, climate change, joblessness and others.” – Man, 30s

A woman made a similar point:

“[AI has] the ability to learn and create things that humans are incapable of doing. [AI programs] will have massive impacts to our daily life and will solve issues related to climate change and healthcare.” – Woman, 30s

Smaller shares of those who express more excitement than concern over AI mention its ability to save time and make tasks more efficient (13%), see it as a reflection of inevitable progress (10%), or cite the fact that it could handle mundane or tedious tasks (7%) as the main reasons why they lean enthusiastic about the prospect of AI’s increased presence in daily life.

Another 6% each of those more excited than concerned cite AI’s ability to improve work, their sense that AI is interesting and exciting, and the ability of AI programs to perform difficult or dangerous tasks.

In addition, 4% of those who are more excited say AI is more accurate than humans, while an identical share say they are excited because AI can make things more accessible for those who have a disability or who are older. Some 2% offer personal anecdotes of how AI has already been beneficial to their lives, and another 2% wrote that many of the fears about AI are misplaced due to what they believe to be unrealistic depictions of AI in science fiction and popular culture.

The 37% of Americans who are more concerned than excited about AI’s increasing use in daily life also mention a number of reasons for their apprehension. About one-in-five in this group (19%) worry that increased use of AI will result in job losses for humans. As a woman in her 70s put it:

“[AI programs] will eventually eliminate jobs. Then what will those people do to survive in life?” – Woman, 70s

Meanwhile, 16% of those who are more concerned about the increased use of AI say it could lead to privacy problems, surveillance or hacking. A woman in her 30s wrote of this concern:

“I am concerned that the increased use of artificial intelligence programs will infringe on the privacy of individuals. I feel these programs are not regulated enough and can be used to obtain information without the person knowing.” – Woman, 30s

Another 12% of these respondents are concerned about dehumanization, or the belief that human connections and qualities will be lost, while 8% each mention the potential for AI becoming too powerful or for people to misuse the technology for nefarious reasons.

Some 7% of those who express more concern than excitement about AI say it would make people overly reliant on this technology, and 6% worry about the technology’s failures and flaws.

Small shares of those who are worried about the integration of AI also mention other concerns, ranging from what technology companies or the government would do with this type of technology, to human biases being embedded into these computer programs, to what they see as a lack of regulation or oversight of the technology and the industries that develop it.

Mixed views about some ways AI applications could develop: People are more excited about some, more concerned about others

In addition to the broad question about where people stand in terms of their general excitement or concern about AI, this survey also asked about a number of more specific possible developments in AI programs.

Public views vary widely across the six kinds of possible AI applications included in the survey. Some prompt relatively more excitement than concern, and some generate substantial concern. For instance, 57% say they would be very or somewhat excited about AI applications that could perform household chores, but just 9% express that level of enthusiasm about AI that could make important life decisions for people or know their thoughts and behaviors.

Nearly half (46%) would be very or somewhat excited about AI that could perform repetitive workplace tasks, compared with 26% who would be very or somewhat concerned. When it comes to AI that could diagnose medical problems, people are more evenly split: 40% would be at least somewhat excited and 35% would be at least somewhat concerned, while 24% say they would be equally excited and concerned. Views are more cautious when people are asked about AI that could handle customer service calls: 47% are very or somewhat concerned about this prospect, compared with 27% who are at least somewhat excited.

For each of these possible AI developments, a notable share of Americans say they are equally excited and concerned, ranging from 16% to 27% depending on the development.

Some differences among groups stand out as Americans assess these various AI apps. Those with a high school education or less are more likely than those with postgraduate degrees to say they are at least somewhat concerned at the prospect that AI programs could perform repetitive workplace tasks (36% vs. 12%). Women are more likely than men to say they would be at least somewhat concerned if AI programs could diagnose medical problems (43% vs. 27%). A larger share of those ages 65 and older (82%) than of those 18 to 29 (63%) say they would be very or somewhat concerned if AI programs could make important life decisions for people.

Views of men, White adults seen as better represented than those of other groups when designing AI programs

In recent years, there have been significant revelations about and investigations into potential shortcomings of artificial intelligence programs. One of the central concerns is that AI computer systems may not factor in a diversity of perspectives, especially when it comes to gender, race and ethnicity.

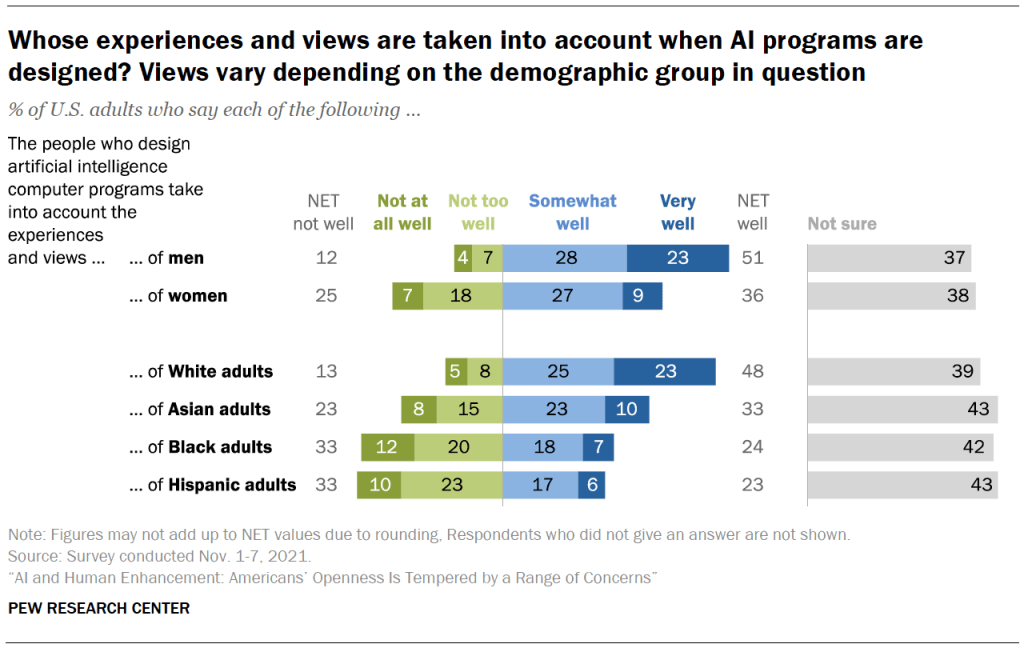

In this survey, people were asked how well they thought that those who design AI programs take into account the experiences and views of some groups. Overall, about half of Americans (51%) believe the experiences and views of men are very or somewhat well taken into account by those who design AI programs. By contrast, smaller shares feel the views of women are taken into account very or somewhat well. And while just 12% of U.S. adults say the experiences of men are not well taken into account in the design of AI programs, about twice that share say the same about the experiences and views of women.

Additionally, 48% think the views of White adults are at least somewhat well taken into account in the creation of AI programs, versus smaller shares who think the views of Asian, Black or Hispanic adults are well-represented. Just 13% feel the views and experiences of White adults are not well taken into account; 23% say the same about Asian adults and a third say this about Black or Hispanic adults.

Still, in each case about four-in-ten say they are not sure how well the experiences and views of the group in question are taken into account as AI programs are designed.

Views on this topic vary across racial and ethnic groups:

Among White adults: They are more likely than other racial and ethnic groups to say they are “not sure” how well the designers of AI programs take into account each of the six sets of experiences and views queried in this survey. For instance, 45% of White adults say they are not sure whether the experiences and views of White adults are well accounted for in the design of AI programs. That compares with 30% of Black adults, 28% of Hispanic adults and 21% of Asian adults who say they are not sure about this. Similar uncertainty among White adults appears when they are asked about other groups’ perspectives.

Among Black adults: About half of Black adults (47%) believe that the experiences and views of Black adults are not well taken into account by the people who design artificial intelligence programs, while a smaller share (24%) say Black adults’ experiences are well taken into account. Compared with Black adults, a similar share of Asian adults (39%) feel the experiences and views of Black adults are not well taken into account when AI programs are designed, while Hispanic adults (35%) and White adults (29%) are less likely than Black adults to hold this view.

Among Hispanic adults: About one-third of Hispanic Americans (34%) believe the experiences and views of Hispanic adults are well taken into account as AI programs are designed. This is the highest share among the groups in the survey, compared with 24% of Asian adults, 22% of Black adults and 21% of White adults who feel this way. Meanwhile, 36% of Hispanic adults say the experiences and views of Hispanic adults are not well taken into account as AI programs are designed, and about three-in-ten (29%) say they are not sure.

Among Asian adults: Some 41% of Asian adults think that the experiences of Asian adults are well taken into account. Similar shares of Hispanic adults (42%) and Black adults (36%) say this about Asian adults’ experiences and views, versus a smaller share of White adults (29%) who think that is the case.

A plurality of Americans are not sure whether AI can be fairly designed

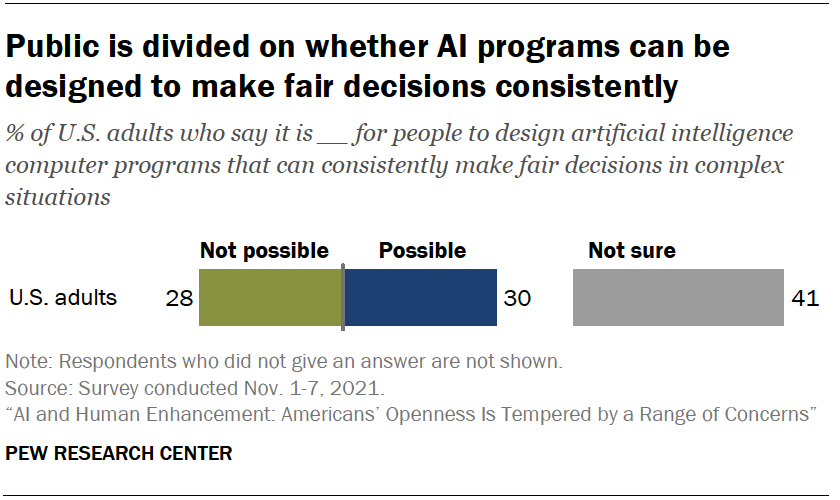

In addition to gathering opinions on how well various perspectives are taken into account, the survey explored whether people think AI programs can make fair decisions. Asked if it is possible for the people who design AI to create computer programs that can consistently make fair decisions in complex situations, Americans are divided: 30% say it is possible, 28% say it is not possible, and the largest share (41%) say they are not sure.

Some of the most noteworthy group differences on this question are by gender. Men are more likely than women to believe it is possible to design AI programs that can consistently make fair decisions (38% vs. 22%), and women are more likely to say they are not sure (46% vs. 35%).