By Kyley McGeeney and H. Yanna Yan

Text messaging has grown in popularity in recent years, leading survey researchers to explore ways texts might be used as tools in the public opinion research process. In the U.S., at least, researchers must obtain consent from respondents before they are permitted to send an automated text. This means that text messaging can’t be used in standard one-off surveys of the public – surveys where pollsters reach out to a randomly sampled list of telephone numbers. Texting also presents measurement challenges: It offers only limited space for writing questions and requires respondents to type rather than click. While these factors limit the utility of texting for interviewing itself, texting has been explored as a means of alerting people to complete a survey, such as by sending them a link to a web survey.1

A new study by Pew Research Center found that sending notifications via text to consenting survey panel members improves response time (people take the survey sooner, on average) and boosts the share of respondents completing the survey on a mobile device. It does not, however, increase the ultimate response rate over a longer field period compared with sending notifications by email only.

These results come from two experiments conducted by Pew Research Center using its American Trends Panel (ATP), a probability-based, nationally representative group of people who have agreed to take multiple surveys and receive text messages about them. The first experiment examined the effect of sending a text as a first notification to take a survey. The second experiment examined using text messages to remind people to take a survey. These messages were sent several days after the initial notification.

In the first experiment, web panelists who had previously consented to receiving text messages (2,109 out of 3,634 web panelists in total) were randomized into one of two groups: one group received survey invitations via email and the other received them via both text message and email.2
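The random assignment in the first experiment can be illustrated with a minimal sketch. It assumes a simple shuffle-and-split into two groups of near-equal size. The report does not state the group sizes or the randomization procedure actually used, so the function name `assign_arms`, the seed and the even split are all hypothetical.

```python
import random


def assign_arms(panelist_ids, seed=0):
    """Shuffle consenting panelists and split them into two arms.

    Assumption: an even split. The report gives only the total
    number of consenting web panelists (2,109), not the arm sizes.
    """
    rng = random.Random(seed)  # fixed seed so the assignment is reproducible
    shuffled = list(panelist_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {
        "email_only": shuffled[:half],
        "email_and_text": shuffled[half:],
    }


# Hypothetical panelist IDs 0..2108 stand in for the 2,109 consenters.
arms = assign_arms(range(2109))
```

Because every consenting panelist has the same chance of landing in either arm, later differences in response timing between the arms can be attributed to the text invitation rather than to who was assigned where.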

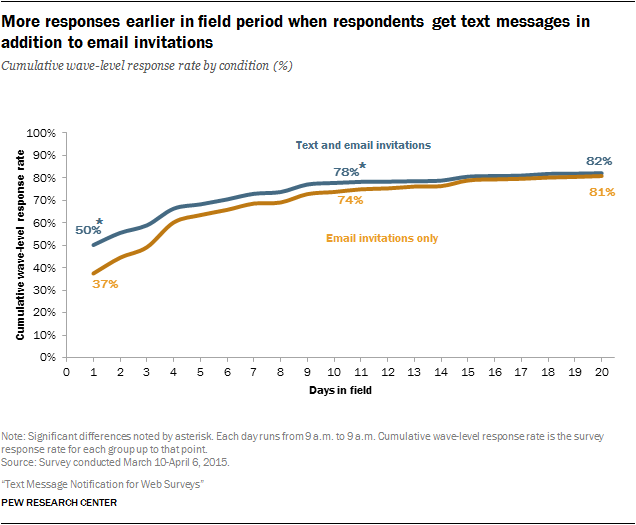

On average, panelists in the email and text group completed the survey earlier than those in the email-only group. This difference was most dramatic at the beginning of the survey field period. By the end of the first full day, half (50%) of the email and text group had completed the survey, compared with 37% of the email-only group.

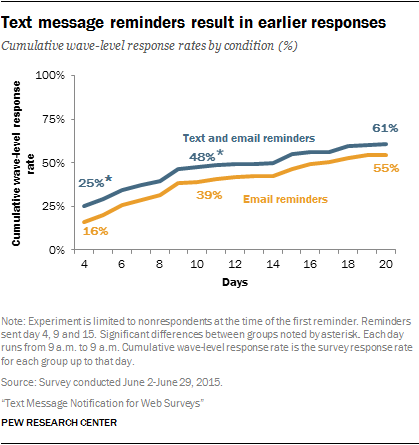

In the second experiment, all web panelists who had consented to text messages were sent survey invitations via email and text message. Those who hadn’t responded after three days were then randomized into one of two groups: one group received reminders via email only and the other received reminders via email and text.3

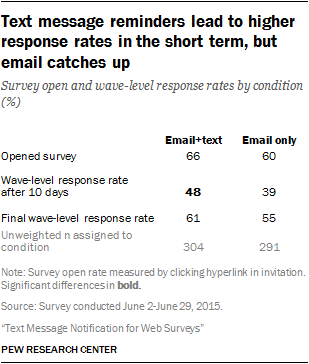

Again, on average, panelists in the text and email reminder group responded earlier than panelists in the email-only reminder group. By the 10th day, the text and email reminder group had a wave-level response rate of 48% vs. 39% in the email-only reminder group. However, there was no significant difference in the final wave-level response rate by the end of the field period.

This report examines the response patterns and demographic composition of respondents in each group for the two experiments. It also looks more broadly at who in the panel consented to receiving this type of survey text message.

Text messages produce earlier responses but no difference in final response rate

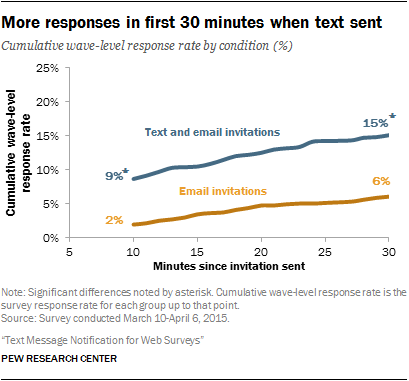

When panelists received invitations via text message and email, they completed the survey earlier in the field period than those who received only email. In fact, in the first 30 minutes after the survey invitations were sent, 15% of the text message and email group had responded to the survey vs. only 6% of the email-only group. This has important implications for survey researchers who need to collect data in a short amount of time.

By the end of the first full day in the field, half of the panelists (50%) in the text and email group had responded vs. only 37% of the email-only group. By the end of the third day, 59% of the text and email group had responded vs. 49% of the email-only group. The higher wave-level response rate in the text and email group continued through the 10th day in the field.

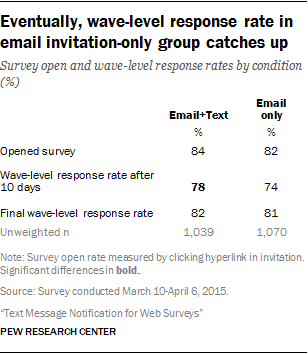

However, given enough time in the field, the email-only group’s response rate eventually caught up with that of the email and text group. By the end of the 20-day field period, there was no difference between the two groups in the share who opened the survey or the share who responded.

In total, 84% of the email and text group opened the survey vs. 82% of the email-only group, which led to an 82% and 81% response rate, respectively. The 84% of text and email panelists who opened the survey consisted of 54% who opened the survey from the email link and 30% who opened using the link in the text message, as they had the option to use either link.
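A simple two-proportion z-test offers one way to see why a 13-point gap on the first day stands out while a 1-point gap at the end does not. The sketch below is illustrative only. It assumes the 2,109 consenting panelists were split about evenly (1,055 and 1,054), which the report does not state, and it ignores any weighting or design adjustments that the actual analysis may have applied. The function name `two_proportion_z_test` is hypothetical.

```python
import math


def two_proportion_z_test(p1, n1, p2, n2):
    """Return (z, two-sided p-value) for the difference p1 - p2.

    Uses the pooled-proportion standard error and the normal
    approximation; no survey weights are applied.
    """
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return z, p_value


# Assumed group sizes (not reported): an even split of 2,109 panelists.
N_TEXT_AND_EMAIL, N_EMAIL_ONLY = 1055, 1054

# End of first full day: 50% vs. 37% responded.
day_one = two_proportion_z_test(0.50, N_TEXT_AND_EMAIL, 0.37, N_EMAIL_ONLY)

# End of 20-day field period: 82% vs. 81% responded.
final = two_proportion_z_test(0.82, N_TEXT_AND_EMAIL, 0.81, N_EMAIL_ONLY)
```

Under these assumed group sizes, the first-day difference is significant at the conventional 0.05 level and the final difference is not, which matches the pattern described in the report.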

It’s important to note that had the field period been shorter, as is typical in other web surveys, the final response rate would have been higher for the text and email group. For instance, 10 days into the field period, 78% of the text and email group had responded compared with only 74% of the email-only group. After 10 days, the difference between the two groups narrowed to just 1 percentage point.

Text messages lead to more interviews completed on a smartphone

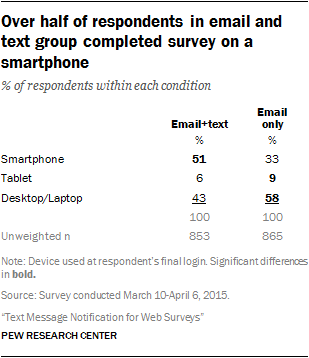

Not surprisingly, sending invitations via text message encourages respondents to complete the survey on a smartphone.

Within the email and text group, about half (51%) of respondents completed the survey on a smartphone, compared with only a third (33%) of respondents in the email-only group, despite similar rates of smartphone ownership in the two groups.4

Counting tablets as well as smartphones, 57% of respondents in the text and email group completed the survey using a mobile device, compared with only 42% of the email-only group. For survey researchers who want to leverage features of smartphones in their studies, such as capturing GPS (with appropriate consent) or asking respondents to take pictures, this could be quite helpful.

On the other hand, certain types of surveys are better suited for completion on a desktop or laptop computer, such as very long surveys or those using software that is not optimized for smartphones. For these types of surveys, encouraging completion on a mobile device could lead to data quality issues or higher break-off rates.

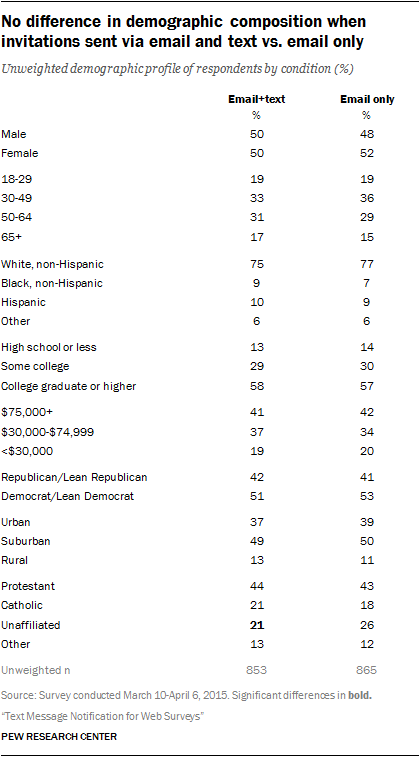

Text message invitations did not distort respondent demographic composition

Demographically, the respondents in the text message and email group were virtually indistinguishable from respondents in the email-only group. There were no differences between the two groups in terms of sex, age, race/ethnicity, education, income, party affiliation or urbanicity. The only difference found was that the email-only group was somewhat more likely to be religiously unaffiliated (26%) than the text message and email group (21%). Even limiting the analysis to respondents from the first 10 days still produces no demographic difference between the two groups apart from religious affiliation.

This lack of demographic difference across the two sets of respondents is encouraging, as there is evidence that texting is more popular among certain demographic groups than others, such as younger adults or those with higher education. The experiment suggests that these differences in who texts did not result in differences in who responded to the panel survey when text invitations were used. This may be due in part to the fact that in both experiments all respondents received email invitations. If, by contrast, respondents had been able to access the survey only via the link from the text, then the effect on the demographic profile of the responding sample might have been more noticeable.

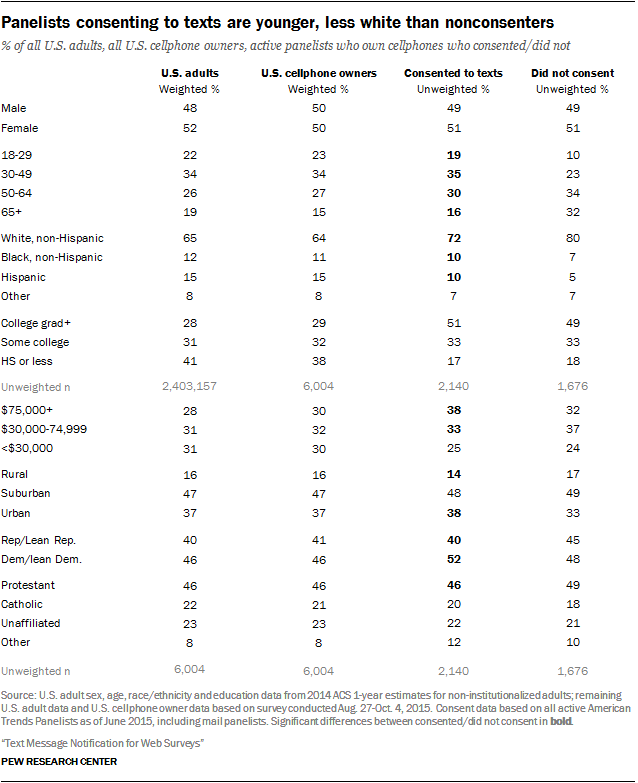

Not all panelists consent to text messages; those who do are demographically distinct

[According to the Centers for Disease Control and Prevention’s National Health Interview Survey, 91% of adults own cellphones. When last measured by Pew Research Center in 2013, 81% of cellphone owners used text messaging. Taken together (0.91 × 0.81 ≈ 0.74), these figures imply that at least 74% of U.S. adults use text messaging. Given the rise in text messaging over time, this is likely an underestimate.]

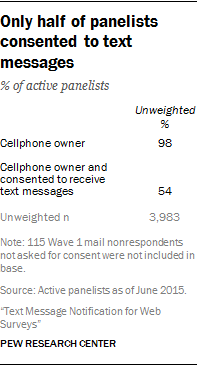

Under federal law, researchers need prior consent in order to send automated text messages to potential respondents. Text messaging was possible in this panel study because consent was obtained from panelists prior to this study being conducted.

Those who consented to receiving survey text messages tend to be younger and to have higher incomes than those who didn’t consent. Consenters are more likely to live in urban areas and to be Democrats. They are less likely to be white or to be Protestant. However, there is no difference between consenters and nonconsenters in sex or education.

Additionally, 5% of panelists consented to receiving text messages but could not receive Pew Research Center text messages because ATP texts are sent using short codes, which the panelists had blocked. Short codes are five- to six-digit phone numbers that companies use to, among other things, send automated text messages to a large set of mobile telephone numbers more quickly than if using a traditional 10-digit phone number (long code). Some cellphone carriers, device manufacturers and/or individuals choose to block text messages sent from short codes.

All in all, only 49% of web panelists could receive survey text messages because they owned a cellphone, consented to receiving text messages and did not have short codes blocked.5 Pew Research Center uses short codes due to the volume of texts being sent and the speed efficiencies, but using a long code would allow researchers to reach these additional panelists.

Reminder texts result in earlier responses, but not higher overall wave-level response rate

In the second experiment, all consenting respondents received text message and email invitations to their surveys. Those who had not responded by the third day were randomized into either the treatment group, which received text and email reminders, or the control group, which received only email reminders. Reminders were sent on days 4, 9, and 15 of the field period.

The text message reminders resulted in earlier responses, although by day 11 the control group had caught up and its response rate was no longer significantly lower than that of the treatment group. By the end of the 20-day field period there was no statistically significant difference in the final open or response rates between the treatment and control groups. That said, the 20-day field period used in the ATP is longer than that of most public opinion surveys. If the field period had been 10 days, for example, the text message reminders would have resulted in a significantly higher final wave-level response rate.

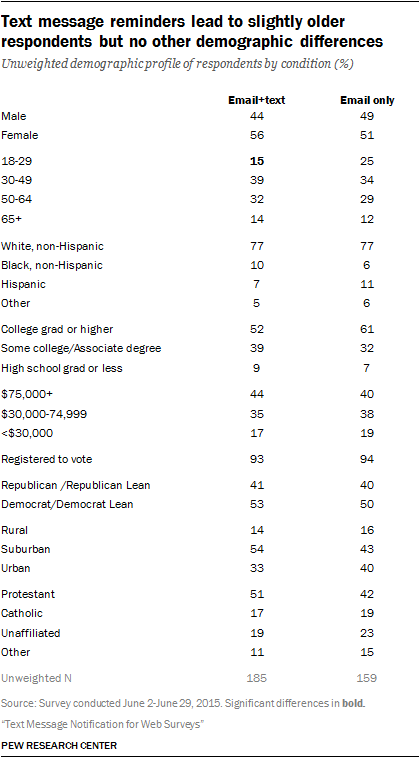

Reminder text messages skewed responding sample slightly older

The respondents who received only email reminders but still answered the survey were younger than the respondents who received both text and email reminders. Of the email-only group, one quarter (25%) were ages 18 to 29 versus only 15% of the text and email group. This was unexpected because of the popularity of texting among this age group. One possible explanation is that younger people use text messaging more than older adults do, so the text message reminders may have been less novel to them. There were no other differences in demographics between the two groups of respondents.

Text messages are now standard American Trends Panel protocol

Based on the results of these experiments, consensual text message invitations and reminders are now standard protocol for the American Trends Panel. The next step is to explore using consensual text messages to collect the survey responses themselves, rather than just using texts to send links to web surveys.