Who Are You? The Art and Science of Measuring Identity

As a shop that studies human behavior through surveys and other social scientific techniques, we have a good line of sight into the contradictory nature of human preferences. Today, we’re calling out one contradiction that affects us as pollsters: categorizing our survey participants in ways that enhance our understanding of how people think and behave.

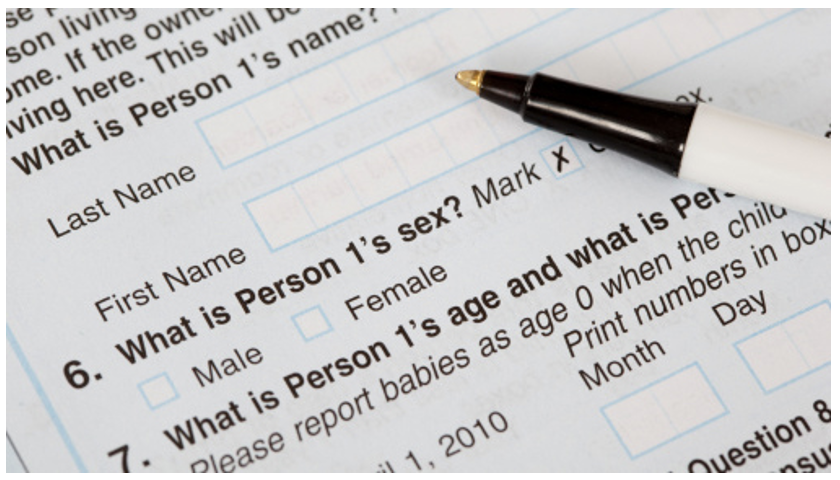

Here’s the tension: On the one hand, many humans really like to group other humans into categories. Think, “Women are more likely to vote Democratic and men to vote Republican.” It helps us get a handle on big, messy trends in societal thought. To get this info, surveys need to ask each respondent how they would describe themselves.

On the other hand, most of us as individuals don’t like being put into these categories. “I’m more than my gender! And I’m not really a Republican, though I do always vote for them.” On top of that, many don’t like being asked nosy questions about sensitive topics. A list of the common demographic questions at the end of a survey can basically serve as a list of things not to raise at Thanksgiving dinner.

But our readers want to see themselves in our reports, and they want to know what people who are like them – and unlike them – think. To do that, it’s helpful for us to categorize people.

Which traits do we ask about, and why?

Unlike most Pew Research Center reports, where the emphasis is on original research and the presentation of findings, our goal here is to explain how we do this – that is, how we measure some of the most important core characteristics of the public, which we then use to describe Americans and talk about their opinions and behaviors.

To do so, we first chose what we judged to be the most important personal characteristics and identities for comparing people who take part in our surveys. Then, for each trait, we looked at a range of aspects: why and how it came to be important to survey research; how its measurement has evolved over time; what challenges exist to the accurate measurement of each; and what controversies, if any, remain over its measurement.

These considerations and more shape how we at Pew Research Center measure several important personal characteristics and identities in our surveys of the U.S. public. Here are some things to know about key demographic questions we ask:

- Our main religion question asks respondents to choose from 11 groups that encompass 97% of the U.S. public: eight religious groups and three categories of people who don’t affiliate with a religion. Other, less common faiths are measured by respondents writing in their answer. Our questions have evolved in response to a rise in the share of Americans who do not identify with any religion and to the growing diversity in the country’s population. (Chapter 1)

- Measuring income is challenging because it is both sensitive and sometimes difficult for respondents to estimate. We ask for a person’s “total family income” the previous calendar year from all sources before taxes, in part because that may correspond roughly to what a family computed for filing income taxes. To reduce the burden, we present ranges (e.g., “$30,000 to less than $40,000”) rather than asking for a specific number. (Chapter 2)

- We ask about political party affiliation using a two-part question. People who initially identify as an independent or “something else” (instead of as a Republican or Democrat) and those who refuse to answer receive a follow-up question asking whether they lean more to the Republican Party or the Democratic Party. In many of their attitudes and behaviors, those who only lean to a party greatly resemble those who identify with it. (Chapter 3)

- Our gender question tries to use terminology that is easily understood. It asks, “Do you describe yourself as a man, a woman or in some other way?” Amid national conversation on the subjects, gender and sexual orientation are topics on the cutting edge of survey measurement. (Chapter 4)

- In part because we use U.S. Census Bureau estimates to statistically adjust our data, we ask about race and Hispanic ethnicity separately, just as the census does. People can select all races that apply to them. In the future, the census may combine race and ethnicity into one question. (Chapter 5)

- A person’s age tells us both where they fall in the life span, indicating what social roles and responsibilities they may have, and what era or generation they belong to, which may tell us what events in history had an effect on their political or social thinking. We typically ask people to report just the year of their birth, which is less intrusive than their exact date of birth. (Chapter 6)
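The two-part party question described in the list above amounts to a small recoding step. Here is a minimal Python sketch of that logic. It is illustrative only, not the Center's actual code: the function name, argument names and category labels are all assumptions.

```python
from typing import Optional


def party_with_leaners(party: Optional[str], lean: Optional[str]) -> str:
    """Combine the initial party answer and the follow-up "lean" answer.

    `party` is the first answer ("Republican", "Democrat", "Independent",
    "Something else", or None for a refusal). `lean` is the follow-up answer
    ("Republican", "Democratic", or None). All labels are hypothetical.
    """
    # People who name a party up front are never asked the follow-up.
    if party in ("Republican", "Democrat"):
        return party
    # Independents, "something else" and refusals get the lean question.
    if lean == "Republican":
        return "Lean Republican"
    if lean == "Democratic":
        return "Lean Democratic"
    # No answer to the follow-up either.
    return "No lean"
```

Keeping leaners in their own categories, rather than folding them straight into the party groups, lets an analyst report them separately or combine them with identifiers, depending on the question at hand.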

Each of these presents interesting challenges and choices. While there are widely accepted best practices for some, polling professionals disagree about how most effectively to measure many characteristics and identities. Complicating the effort is that some people rebel against the very idea of being categorized and think the effort to measure some of these dimensions is divisive.

It’s important that our surveys accurately represent the public

In addition to being able to describe opinions using characteristics like race, sex and education, it’s important to measure these traits for another reason: We can use them to make sure our samples are representative of the population. That’s because most of them are also measured in large, high-quality U.S. Census Bureau surveys that produce trustworthy national statistics. We can make sure that the makeup of our samples – what share are high school graduates, or are ages 65 or older, or identify as Hispanic, and so on – matches benchmarks established by the Census Bureau. To do this, we use a tool called weighting to adjust our samples mathematically.
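To make the idea of weighting concrete, here is a minimal Python sketch of one simple form of it: adjusting a sample on a single characteristic so its weighted shares match a benchmark. This essay does not spell out the weighting procedure, so treat this as an illustration under stated assumptions rather than the actual method. The function name, the tiny sample and the benchmark shares are all made up for the example.

```python
from collections import Counter


def poststratify(sample, benchmarks):
    """Return one weight per respondent so that the weighted share of each
    category equals its benchmark share.

    `sample` is a list of category labels, one per respondent.
    `benchmarks` maps each label to its population share (shares sum to 1).
    """
    counts = Counter(sample)
    n = len(sample)
    weights = []
    for category in sample:
        sample_share = counts[category] / n
        # Overrepresented groups get weights below 1, underrepresented above 1.
        weights.append(benchmarks[category] / sample_share)
    return weights


# Hypothetical sample: 3 of 10 respondents are ages 65 or older (30%).
sample = ["65 or older"] * 3 + ["under 65"] * 7
# Made-up benchmark shares, for illustration only.
benchmarks = {"65 or older": 0.2, "under 65": 0.8}

weights = poststratify(sample, benchmarks)
weighted_share_65 = (
    sum(w for w, c in zip(weights, sample) if c == "65 or older") / sum(weights)
)
```

In this toy case, respondents ages 65 or older are overrepresented, so each gets a weight below 1, and the weighted share of that group comes out at the benchmark value. Real surveys adjust on several characteristics at once, which takes more than this one-variable sketch.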

Some of the characteristics we’ll talk about are not measured by the government: notably, religion and party affiliation. We’ve developed an alternative way of coming up with trustworthy estimates for those characteristics – our National Public Opinion Reference Survey, which we conduct annually for use in weighting our samples.

You are who you say you are – usually

We mostly follow the rule that “you are who you say you are,” meaning we place people into whichever categories they say they are in. But that was not always true in survey research for some kinds of characteristics. Through 1950, enumerators for the U.S. census typically coded a person’s race by observation, not by asking. And pollsters conducting telephone surveys relied on respondents’ voices and other cues in the interview to identify their gender, rather than asking them.

Nowadays, we typically ask. We still make judgments that sometimes end up placing a person in a different category than the one in which they originally placed themselves. For example, when we group people by religion, we use some categories that are not familiar to everyone, such as “mainline Protestant” for a set of denominations that includes the Episcopal Church, the United Methodist Church, the Presbyterian Church (U.S.A.) and others.

And we sometimes use respondents’ answers to categorize them in ways that go beyond what a single question can capture – such as when we use a combination of family income, household size and geographic location to classify people as living in an upper-, middle- or lower-income household.

Nosy but necessary questions

As much as people enjoy hearing about people like themselves, some find these types of personal questions intrusive or rude. The advice columnist Judith Martin, writing under the name Miss Manners, once provided a list of topics that “polite people do not bring into social conversation.” It included “sex, religion, politics, money, illness” and many, many more. Obviously, pollsters have to ask about many of these if we are to describe the views of different kinds of people (at Pew Research Center, we at least occasionally ask about all of these). But as a profession, we have an obligation to do so in a respectful and transparent manner and to carefully protect the confidentiality of the responses we receive.

If you’ve participated in a survey, it’s likely that the demographic questions came at the end. Pollsters typically place these questions last because they are sensitive for some people and boring for most, and partly out of concern that people might quit the survey prematurely in reaction to them. Like other organizations that use survey panels – collections of people who have agreed to take surveys on a regular basis and are compensated for their participation – we benefit from a high level of trust that builds up over months or years of frequent surveys. This is reflected in the fact that we have fewer people refusing to answer our question about family income (about 5%) than is typical for surveys that ask about that sensitive topic. Historically, in the individual telephone surveys we conducted before we created the online American Trends Panel, 10% or more of respondents refused to disclose their family income.

One other nice benefit of a survey panel, as opposed to one-off surveys (which interview a sample of people just one time) is that we don’t have to subject people to demographic questions as frequently. In a one-off survey, we have to ask about any and all personal characteristics we need for the analysis. Those take up precious questionnaire space and potentially annoy respondents. In our panel, we ask most of these questions just once per year, since we are interviewing the same people regularly and most of these characteristics do not change very much.

Speaking of questions that Miss Manners might avoid, let’s jump into the deep end: measuring religion.

Acknowledgments

This essay was written by Scott Keeter, Anna Brown and Dana Popky with the support of the U.S. Survey Methods team at Pew Research Center. Several others provided helpful comments and input on this study, including Tanya Arditi, Nida Asheer, Achsah Callahan, Alan Cooperman, Claudia Deane, Carroll Doherty, Rachel Drian, Juliana Horowitz, Courtney Kennedy, Jocelyn Kiley, Hannah Klein, Mark Hugo Lopez, Kim Parker, Jeff Passel, Julia O’Hanlon, Baxter Oliphant, Maya Pottiger, Talia Price and Greg Smith. Graphics were created by Bill Webster, developed by Nick Zanetti and produced by Sara Atske. Anna Jackson offered extensive copy editing of the finished product.

All of the illustrations and photos are from Getty Images.

CORRECTION (February 20, 2024): An earlier version of this data essay misstated the share of Americans whose religion falls under the 11 main categories offered in the religious affiliation question. The correct share is 97%. This has now been updated.