The Pew Research Center often receives questions from visitors to our site and users of our studies about our findings and how the research behind them is carried out. In this feature, senior research staff answer questions relating to the areas covered by our seven projects, ranging from polling techniques and findings to media, technology, religious, demographic and global attitudes trends. We can’t promise to respond to all the questions that we receive from you, our readers, but we will try to provide answers to the most frequently received inquiries as well as to those that raise issues of particular interest.

If you have a question related to our work, please send it to info@pewresearch.org.

Jump to a question:

- Why does my political party identification affect my political typology classification?

- Are views of marriage affected by the type of family people grew up in?

- How big a proportion of the American public is the Tea Party?

- Do people lie to pollsters about their physical characteristics?

- Do people worry about marriage becoming obsolete?

- How do you decide what to ask in your polls?

- If there are now fewer unauthorized immigrants in the U.S. where did they go?

- Does the Census double count “snowbirds”?

- Why don’t you call old people “seniors?”

- How many Christians are there in Egypt?

- To what degree does my having only a cell phone restrict my availability for surveys?

- Isn’t your religion quiz wrong about when the Sabbath begins?

- Your online news quiz says defense is the biggest item in the budget. That right?

- How can I tell if an “average” is a mean or a median?

- How accurate are the statistics derived from Pew Research polls?

- How accurate are online polls?

- How many people would say that they believed in God if they were able to answer with complete anonymity?

- What kind of response do you get to mail versus phone versus e-mail polling?

- Should I take polls about the 2012 election seriously two years before the election?

- I’m BOTH Jewish and an atheist. How would you classify me in your reports?

- What do other countries think about climate change?

- Why should we care what people think when so many are so dumb?

- What do you mean by “continental U.S.”?

And for even more “Ask the Expert” questions click here.

Q. I just took your typology quiz and found that changing my answer to just one question — my party identification — placed me into a different group. Why does my party identification affect my group assignment?

The political typology aims to put partisan dynamics in the context of broader political values and beliefs: to recognize the importance of partisanship, but go beyond a binary “Red vs. Blue” view of the public, looking at differences both within and across the parties. Throughout the project’s history, party identification has always been a core component of the typology; it is strongly associated with political behaviors (past, present and future), and people with similar attitudes across a host of issues who seemingly differ only in their partisanship may make different political choices. Put differently, actively choosing to identify with the Republican (or Democratic) party, rather than as an independent “leaning” toward that party, has political meaning.

Your typology group might shift with a change in your party identification, as it might if you changed a response to any question used in the typology. This is particularly the case for people who express a combination of beliefs that either don’t fit tightly within any specific group, or might overlap with one or more groups. In effect, the statistical process is looking for centers of gravity in the public, but there will always be many people with views that set them apart. It is for these people in particular that changing a response to a single question (including party) might make the difference in determining the assignment. Other people are more clearly a good fit with only one group, so their assignments are less likely to change with a single response change.

For more on how we determine typology group assignments, both in the survey and in responses to the quiz, see “How We Identified Your Group” at pewresearch.org/pewresearch-org/politics.

Michael Dimock, Associate Director, Research, Pew Research Center for the People & the Press

Q. Do you find in your surveys that the kind of family that people say they were raised in (happily married parents, unhappily married, divorced, single parent, etc.) correlates with their current views of marriage?

Yes and no. When we did a big survey last year on attitudes about marriage and family life, we asked respondents to tell us their parents’ marital status during most of the time they were growing up; 77% said their parents were married, 13% said divorced, 2% separated, 2% widowed and 5% never married. When we cross that family history with responses to questions about marriage, we see a mixed pattern. For example, our survey found that 39% of all respondents agree that marriage is becoming obsolete — including virtually identical shares of respondents whose parents were married (38%) and respondents whose parents were not married for whatever reason (39%). But on other questions, there’s a gap in attitudes between those groups. For example, among respondents whose parents were married while they were growing up, 76% say they are very satisfied with their family life; among respondents whose parents were not married for whatever reason, just 70% say the same. Not surprisingly, there’s a much bigger gap when we asked married respondents if their relationship with their spouse is closer or less close than the relationship their parents had. Among those whose parents were not married, 69% say their spousal relationship is closer, 24% say about the same and just 5% say less close. Among those whose parents were married, just 46% say closer, 48% say about the same and 5% say less close.

Paul Taylor, Director, Pew Research Center Social & Demographic Trends project

Q. The Tea Party, its adherents and its agenda, get a great deal of attention in the media today. How big a proportion of the American public is the Tea Party?

Since the Tea Party appeared on the political scene in 2009, the Pew Research Center and other pollsters have tracked the public’s reaction to the movement. In our most recent survey, conducted March 30-April 3, 22% of the public said they agreed with the Tea Party movement, while 29% said they disagreed with it; 49% said they had no opinion either way or had not heard of it. Throughout our election polling in 2010, Tea Party supporters were closely following the campaign and were much more likely than the average American to say they intended to vote in the November elections. And vote they did: Exit polling by the National Election Pool found that fully 41% of voters said they supported the Tea Party movement.

Previous Pew Research studies have provided an in-depth look at Tea Party supporters and their views, including analyses of their opinions on government spending, government power, and religion and social issues.

Scott Keeter, Director of Survey Research, Pew Research Center

Q. People fudge details of their appearance for personal ads and dating websites. Are they honest about their physical characteristics in surveys?

Apparently not. Whether they are consciously lying is unclear — they may be trying to fool themselves as well as survey researchers — but it appears that many people underestimate their weight when answering questions over the phone. Multiple studies by health researchers have found, for example, that the average respondent to a telephone survey reports a lower weight than the average respondent to a face-to-face survey in which the person is weighed on a scale. The average phone respondent also reports being taller than the average person who participates in a study involving direct measurements of height. Using the standard Body Mass Index (BMI) classification, phone respondents are less likely to be categorized as obese or overweight than are people measured in person.

The Pew Research Center described such underestimates and overestimates of physical characteristics in its April 2006 study of weight problems. The average woman in our phone survey reported a weight five pounds lower than the average woman’s directly measured weight in a U.S. government health study. The average man in our phone survey claimed to be two inches taller than the average man directly measured in the government study. Consequently, when we fed our respondents’ information into a BMI calculator, we found that only 19% were classified as obese, while 31% of respondents in the government study were classified as obese.
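As a rough illustration of why small misreports matter, the sketch below applies the standard imperial BMI formula (703 × pounds ÷ inches squared) and the standard adult cutoffs (25 for overweight, 30 for obese). The person and the size of the misreport are hypothetical and are not taken from the 2006 study; the function names are our own.

```python
def bmi_from_imperial(weight_lb: float, height_in: float) -> float:
    """Body Mass Index from pounds and inches (standard imperial formula)."""
    return 703.0 * weight_lb / (height_in ** 2)


def bmi_category(bmi: float) -> str:
    """Standard adult BMI categories."""
    if bmi >= 30.0:
        return "obese"
    if bmi >= 25.0:
        return "overweight"
    if bmi >= 18.5:
        return "normal"
    return "underweight"


# HYPOTHETICAL person: measured at 175 lb and 64 in, but reports
# 5 lb less and 1 in more when asked over the phone.
measured_bmi = bmi_from_imperial(175, 64)
reported_bmi = bmi_from_imperial(170, 65)

print(f"measured: {measured_bmi:.1f} ({bmi_category(measured_bmi)})")
print(f"reported: {reported_bmi:.1f} ({bmi_category(reported_bmi)})")
```

For someone near a cutoff, a modest understatement of weight combined with a modest overstatement of height is enough to move the classification from one category to the next, which is consistent with the lower obesity rate seen in self-reported data.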

The prevalence and consequences of obesity are important public health issues. Because of the discrepancies between self-reports and direct measures of weight and height, governments often choose to study such characteristics directly rather than relying on self-reports, which are less expensive to collect.

You can find more information about these measurement differences in this review article, although a subscription or a one-time fee is required in order to access it.

Conrad Hackett, Demographer, Pew Forum on Religion & Public Life

Q. One of your recent reports found that 39% of Americans agree that marriage is becoming obsolete. What percent of the sample showed any concern about that trend?

Sometimes after we field a survey and see the results, we kick ourselves for failing to ask an obvious question. This is one of those cases. In retrospect, we should have followed up the “Is marriage becoming obsolete?” question with: “And do you think that’s a good thing for society, a bad thing, or does it make no difference?” We didn’t. But we did use that question wording elsewhere in the same survey when we asked about several marriage-related trends. For example, 43% of our respondents said that the trend toward more people living together without getting married is bad for society, 9% said it is good and a plurality — 46% — said it makes no difference. We also did a follow-up report that used a statistical technique known as cluster analysis to show that the public is divided into three roughly equal segments — accepters, rejecters and skeptics — in the way they judge a range of trends related to changes in marriage and family structure.

Paul Taylor, Director, Pew Research Center Social & Demographic Trends project

Q. How do you decide what questions to ask in your polls?

The subjects that we decide to cover in each of our surveys — and the particular questions we ask about them — are arrived at in a number of ways.

One important factor is, of course, what is happening in the nation and the world. We aim to cover the news. We try to do the best job we can to measure the opinions and attitudes of the public. We focus on those aspects of opinion that are key to press, politics and policy debates. And as part of those assessments we also want to examine what people know about current events, to test what news they follow and what facts they absorb from it. To the extent possible, we want to do all that in real time, while the issues involved are still on the front burner.

A second source of subjects is updating the major databases we have established over time, knowing that shifts in trends are often as important as, or even more important than, the particular attitudes expressed at a point in time. These include our periodic updates on political values, opinions about the press, religion and politics, and views of “America’s Place in the World,” as well as media behavior and political engagement, including voter participation. And, of course, there are our much-watched Pew Global Attitudes surveys, which examine trends in attitudes of populations around the world both with regard to the United States and with respect to happenings in their own countries and in the larger world.

The third source is suggestions that strike us as important, some of them coming from our own staff, from other researchers and from those of you who follow our research and findings. These include our studies of various social phenomena such as the attitudes and behaviors of the Millennial generation, trends in racial attitudes and opinions, attitudes toward science and trust in government and the characteristics and opinions of Muslim Americans.

In all these areas we try, where possible, to reproduce not only the exact wording of questions we have asked in the past, but also the ordering of key questions. That’s because the context in which a question is asked can often significantly influence responses.

Andrew Kohut, President, Pew Research Center

Q. Your recent report indicated that in 2009 and 2010 there were many fewer undocumented immigrants in the United States than in earlier years. Is there any information on where these folks went?

Various factors could account for the decline in the number of unauthorized immigrants in the U.S. in recent years. First, the balance of immigrant flows in and out of the U.S. could have changed, so that the number of newly arriving unauthorized immigrants could be smaller than it used to be, or the number who are leaving could be larger. Second, some unauthorized immigrants could have converted their status by obtaining U.S. citizenship or other legal authorization.

We do have one important clue in trying to determine why the overall numbers declined. The decline in the population of unauthorized immigrants, from 12 million in 2007 to 11.2 million in 2010, is due mainly to a decline in the population of unauthorized immigrants from Mexico. Their numbers declined from 7 million in 2007 to 6.5 million in 2010, although Mexicans still make up the bulk of unauthorized immigrants (58%). Also, analysis of Mexican government data shows that the number of immigrants returning to Mexico each year has declined slightly — about 480,000 Mexicans returned home in 2006 compared with 370,000 in 2009. However, these same data sources suggest that the number of Mexicans migrating to the U.S. (or other countries) fell by almost half from just over 1 million in 2006 to about 515,000 in 2009.
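The arithmetic behind this clue can be laid out explicitly. The sketch below uses only the figures quoted above, with one stated assumption: “just over 1 million” is rounded to 1,000,000. The difference between departures and returns is a rough gauge of overall Mexican migration flows, not an estimate of unauthorized migration.

```python
# Population figures quoted above, in millions.
total_2007, total_2010 = 12.0, 11.2
mexico_2007, mexico_2010 = 7.0, 6.5

total_decline = total_2007 - total_2010
mexico_decline = mexico_2007 - mexico_2010
mexico_share_of_decline = mexico_decline / total_decline
mexico_share_of_2010_total = mexico_2010 / total_2010

# Annual flows from Mexican government data, in people per year.
# ASSUMPTION: "just over 1 million" is rounded to 1,000,000.
left_mexico = {2006: 1_000_000, 2009: 515_000}
returned_to_mexico = {2006: 480_000, 2009: 370_000}
departures_minus_returns = {
    year: left_mexico[year] - returned_to_mexico[year] for year in left_mexico
}

print(f"Mexican share of the decline: {mexico_share_of_decline:.1%}")
print(f"Mexican share of the 2010 total: {mexico_share_of_2010_total:.0%}")
print(f"Departures minus returns: {departures_minus_returns}")
```

On these numbers, Mexicans account for roughly five-eighths of the overall decline, and the gap between departures and returns shrinks from about 520,000 to about 145,000. That points to fewer new arrivals, rather than more people going home, as the main driver.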

Jeffrey S. Passel, Senior Demographer, Pew Hispanic Center

Q. In the 2010 census, was proper care taken to record Americans only once? Many of us have winter homes in the South, and there are also houses owned by Canadians who were still in residence down here on April 1, but probably shouldn’t be counted since they are visitors.

Census Bureau rules state that people are to be counted in their “usual residence” as of April 1, meaning the place where they live and sleep most of the time. After the 2000 Census, analysis by the Census Bureau suggested that the U.S. population may have been over-counted for the first time in history because of the many people who were tallied more than once. Because of the many duplicate enumerations resulting from people who were counted in two different homes (“households,” in census parlance), the Census Bureau invested extra effort and publicity in trying to avoid this type of error in the 2010 Census. Snowbirds — people who live in cold states or other countries for most of the year, but move to warm states for the winter — were one focus of this effort.

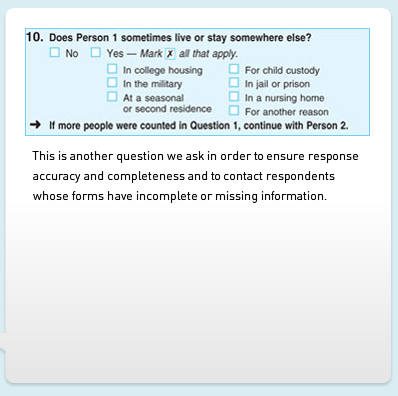

One major step the bureau took was to add a screening question to the 2010 Census form, asking whether each person in the household sometimes lives or stays somewhere else; if the answer was yes, the form included individual check-boxes for “in college housing,” “in the military,” “at a seasonal or second residence,” “for child custody,” “in jail or prison,” “in a nursing home” and “for another reason.” Census Bureau staff planned to contact households for follow-up if the screening question raised a red flag.

Here’s the question as it appears on the interactive version of the 2010 census form.

D’Vera Cohn, Senior Writer, Pew Research Center

Q. In your 2009 report “Growing Old in America,” the words “older adult” and “middle-aged” are used along with age groups (e.g., respondents ages 65 and above). In other Pew Research reports, age cohorts are named as Baby Boomer, Silent Generation and so forth. Why does the Pew Research Center use these terms for older adults, instead of the words “senior” or “elderly”?

Your examples refer to two different kinds of studies. In some reports, we present data on age cohorts, also called generations. These are groups of people who share a specific set of birth years. The Baby Boomers are perhaps the best-known example of an age cohort. This is a generation born in the years following World War II (typically limited to 1946-1964). Another generation we have reported on is the Millennials, the country’s youngest adults (we define them as adults born after 1980). Our typical generational analysis will compare the attitudes and characteristics of a generation not only with those of other generations but also with its own at earlier points, using data from surveys conducted years earlier.

In other reports, we are simply comparing different age groups at one point in time. In these reports, we use labels commonly associated with the stage of life in which the group is located. Admittedly, we aren’t fully consistent in how we label different age groups, occasionally using “seniors” interchangeably with “older adults” and other synonyms. We do try very hard to avoid the use of the term “elderly,” since many people find the term objectionable, and there is little agreement on how old one must be to be elderly.

Scott Keeter, Director of Survey Research, Pew Research Center

Q. I keep hearing different estimates being cited about how many Christians there are in Egypt. What are the facts?

The numbers are debated. Media reports, sometimes citing officials of the Coptic Orthodox Church, frequently say that Christians make up 10% or more of the country’s approximately 80 million people. But researchers at the Pew Forum on Religion & Public Life have been unable to find any Egyptian census or large-scale survey that substantiates such claims.

The highest share reported in the past century was in 1927, when the census found that 8.3% of Egyptians were Christians. In each of seven subsequent censuses, the Christian share of the population gradually shrank, ending at 5.7% in 1996. Religion data has not been made available from Egypt’s most recent census, conducted in 2006. But in a large, nationally representative 2008 survey — the Egyptian Demographic and Health Survey, conducted among 16,527 women ages 15 to 49 — about 5% of the respondents were Christian. Thus, the best available census and survey data indicate that Christians now number roughly 5% of the Egyptian population, or about 4 million people. The Pew Forum’s recent report on The Future of the Global Muslim Population estimated that approximately 95% of Egyptians were Muslims in 2010.

Of course, it is possible that Christians in Egypt have been undercounted in censuses and demographic surveys. According to the Pew Forum’s analysis of Global Restrictions on Religion, Egypt has very high government restrictions on religion as well as high social hostilities involving religion. (Most recently, a bombing outside a church in Alexandria during a New Year’s Eve Mass killed 23 people and wounded more than 90.) These factors may lead some Christians, particularly converts from Islam, to be cautious about revealing their identity. Government records may also undercount Christians. According to news reports, for example, some Egyptian Christians have complained that they are listed on official identity cards as Muslims.

Even if they are undercounts, the census and survey data suggest that Christians have been steadily declining as a proportion of Egypt’s population in recent decades. One reason is that Christian fertility has been lower than Muslim fertility — that is, Christians have been having fewer babies per woman than Muslims in Egypt. Conversion to Islam may also be a factor, though reliable data on conversion rates are lacking. It is possible that Christians have left the country in disproportionate numbers, but ongoing efforts by the Pew Forum to tally the religious affiliation of migrants around the world have not found evidence of an especially large Egyptian Christian diaspora. For example, in the United States, Canada and Australia, the majority of Egyptian-born residents are Christian, but the estimated total size of the Egyptian-born Christian populations in these countries is approximately 160,000. In contrast, there are more than 2 million Egyptian-born people living in Saudi Arabia, Kuwait and the United Arab Emirates, the overwhelming majority of whom are likely to be Muslims.

Most, but not all, Christians in Egypt belong to the Coptic Orthodox Church of Alexandria. Minority Christian groups include the Coptic Catholic Church and assorted Protestant churches.

Here are some sources you might want to consult for further information on the subject:

- Egyptian Demographic and Health Survey

- Campo, Juan E. and John Iskander (2006), “The Coptic Community” in The Oxford Handbook of Global Religions, edited by Mark Juergensmeyer.

- Courbage, Youssef and Philippe Fargues (1997), Christians and Jews under Islam, I.B. Tauris & Co. Translated by Judy Mabro.

- Ambrosetti, Elana and Nahid Kamal (2008), “The Relationship between Religion and Fertility: The Case of Bangladesh and Egypt.” Paper presented at the 2008 European Population Conference.

- Egypt population

- World Religion Database, historical Egyptian census data.

- Integrated Public Use Microdata Series International, 1996 Egyptian census data

Conrad Hackett, Demographer, Pew Forum on Religion & Public Life

Q. When I was living in Oregon and had a landline telephone with a listed number, I occasionally received various requests to participate in surveys. I now live in Connecticut and have only a cell phone (with no directory listing that I know of) and have never received a survey inquiry. To what degree does my having only a cell phone restrict my availability for surveys? And does living in a state with greater population density also mean that I would less often be the subject of survey requests?

Having a cell phone rather than a landline does make it less likely that you will receive an interview request by phone. There are a couple of reasons for this. Although the Pew Research Center now routinely includes cell phone numbers in our samples, many survey organizations still do not do so. In addition, most surveys that include cell phones conduct more landline than cell phone interviews. Of course, if you have both a landline and a cell phone, you will be more likely than someone with only one device or the other to receive a survey request. We take that fact into account when we apply statistical weighting to our data before tabulating the results.

The fact that you live in a densely populated place does not, by itself, make your likelihood of inclusion in a survey any smaller. We draw our samples of telephone numbers in proportion to the population in a given area, so everyone should have roughly the same opportunity to be contacted (though, as noted, with the caveat that the type of phone or phones you have will affect your probability of being contacted).
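The adjustment for phone type mentioned above can be pictured as inverse-probability weighting: people who are easier to reach count for a little less, and people who are harder to reach count for a little more. The sketch below is a simplified illustration, not the Center’s actual weighting procedure. The sampling rates are hypothetical, and adding the two rates for people with both phones is an approximation that holds when both rates are small.

```python
def selection_chance(has_landline: bool, has_cell: bool,
                     landline_rate: float, cell_rate: float) -> float:
    """Approximate chance of being dialed when landline and cell
    samples are drawn separately (simplified: the rates are added)."""
    chance = 0.0
    if has_landline:
        chance += landline_rate
    if has_cell:
        chance += cell_rate
    return chance


# HYPOTHETICAL sampling rates; landlines are sampled more heavily here
# because most surveys conduct more landline than cell interviews.
LANDLINE_RATE = 0.0002
CELL_RATE = 0.0001

phone_types = {
    "landline only": (True, False),
    "cell only": (False, True),
    "both": (True, True),
}

# Base weight = 1 / chance of selection.
base_weights = {
    label: 1.0 / selection_chance(landline, cell, LANDLINE_RATE, CELL_RATE)
    for label, (landline, cell) in phone_types.items()
}

for label, weight in base_weights.items():
    print(f"{label}: {weight:,.0f}")
```

Under these assumed rates, a cell-only respondent receives the largest weight and a respondent with both kinds of phone the smallest, which offsets their unequal chances of being called.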

Scott Keeter, Director of Survey Research, Pew Research Center

Q. On the Religious Knowledge Survey devised by the Pew Forum on Religion & Public Life, I believe that the specified “correct” answer to question No. 4 — When does the Jewish Sabbath begin? — is, in fact, incorrect. The Sabbath was Saturday and almost all peoples 2000 or more years ago, including the Jews, began each day at sundown — not at midnight — not at sunrise — but at sundown. The Sabbath is Saturday and no day can start on the day before itself. Your question did not specify using “modern” convention. Therefore, your answer commits the fallacy of amphiboly (a faulty interpretation). I chose the correct answer on all the other questions and anyone who answered question No. 4 with your answer was, in fact, wrong.

Thank you for your wonderfully erudite inquiry; we are going to award you an honorary “15” on the religious knowledge quiz. Indeed, you would have had a very strong case if we had conducted the survey in Hebrew, in which case the correct answer would have been Yom haShabbat and not Yom Shishi. But the survey was NOT conducted in Hebrew. It was given in English, and 94% of Jews who took the survey answered this question by saying that the Jewish Sabbath begins on FRIDAY (4% of self-identified Jews gave other answers, and 2% said they didn’t know). The 94% of Jews who said “Friday” could all be guilty of amphiboly, of course. Worse things have been said. But there was no question in the entire survey about which there was greater unanimity among the members of a religious group as to the correct answer. Not even Mormons were as monolithic in their answers about the Book of Mormon.

Alan Cooperman, Associate Director, Research and Gregory A. Smith, Senior Researcher, Pew Forum on Religion & Public Life

Q. I try very hard to keep abreast of current events, and was therefore disappointed that I did not answer one of the questions on your online political news quiz correctly. Question No. 11 asks: “On which of these activities does the U.S. government currently spend the most money?” Your answer is national defense. However, I am under the impression that we spend the most money on entitlement programs and on interest on national debt. How have I been so misled?

Before we get to your question, please don’t feel disappointed by your test performance. When we put this current set of news quiz questions to a national sample of adults in a November 2010 survey, the average American answered fewer than half of them correctly. Furthermore, only 39% of Americans knew the right answer to this specific question about the federal budget. Having answered 11 of 12 questions correctly, you should certainly still consider yourself up to date with current events.

As for the specific question you emailed about, question No. 11 of the online news quiz asks the quiz taker to select from among four possible programs (education, interest on the national debt, Medicare and national defense) the activity the U.S. government spends the most money on.

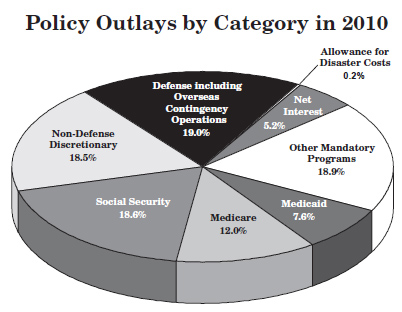

To help answer this question, pasted below is a chart created by the Office of Management and Budget (OMB) in August 2009 for the 2010 budget which illustrates how the federal government spends its money. (The OMB chart has also been reproduced on the website of the New York Times).

As you can see, spending on national defense accounted for 19% of the federal budget in 2010. This is more than was spent on the other three programs you can choose from on the news quiz: Medicare (12%), interest on the debt (5.2%) and total non-defense discretionary spending (18.5%), which includes all education spending plus numerous other programs.

You are correct that the government spends more on entitlements (Social Security plus Medicare plus Medicaid plus other mandatory programs) than national defense, but remember, the question asked you to choose which among the four specific programs received the most money. Taken individually, none of the government’s mandatory-spending programs receives more money than national defense. Social Security is close, but that was not one of the answer options.

Also, while interest on the debt is substantial — it accounts for nearly as much money as Medicaid — the spending on interest is still well below the amount allocated for national defense.

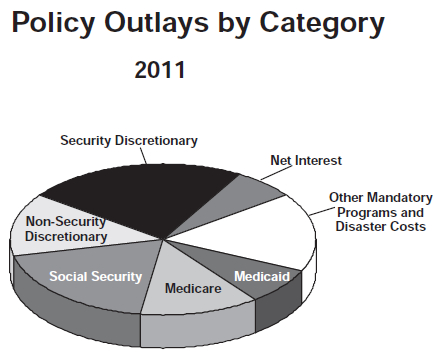

The chart above, however, does not contain the most recent federal budget data released by the government. Pasted below is a similar chart from the most recent OMB report, Budget of the U.S. Government: Fiscal Year 2011 (PDF). You can find the chart in the section titled “Summary Tables,” and a host of other information about government spending in the full report. While this chart, unfortunately, does not contain the percentages of government spending by category, you can see that the outlays for national defense (labeled “security discretionary”) are again clearly larger than the outlays for Medicare, net interest and all non-security discretionary spending (which includes a small slice for education).

For a closer look at how the federal government spends its money, you may find these interactive graphics produced by the Washington Post and New York Times informative.

Richard C. Auxier, Researcher/Editorial Assistant, Pew Research Center

Q. When reporting on a poll that involves an “average” amount (not just on Pew Research reports, but on all that I have seen), there is seldom any indication of whether the average amount is a mean or a median. Also, no measure of variability is given such as standard deviation or a range. Is there a reason for this? For example, one can statistically compare “65% of voters preferred Candidate X last month, but 62% do this month” (sample sizes are always given). But without a measure of variability, one cannot compare “American white-collar workers spent an average of $9 a day for lunch last month and $8 a day this month.”

Our goal is to make sure that any reference to the average or typical amount also clearly indicates whether a mean or median is being used. But we don’t always achieve that goal. Your question is a good reminder that we should do better. We do make every effort to ensure that we use the most appropriate measure, given the underlying distribution of the variable. We sometimes even report both (for an example, see pages 31-32 of this report (PDF) by the Pew Internet & American Life Project on teens and their mobile phones).
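A small example shows why the distinction matters when a distribution is skewed. The daily lunch amounts below are hypothetical, chosen so that one large value pulls the mean well above what a typical person spends.

```python
from statistics import mean, median

# HYPOTHETICAL daily lunch spending, in dollars, for eight workers.
lunch_spending = [5, 6, 7, 8, 8, 9, 10, 75]

average_as_mean = mean(lunch_spending)
average_as_median = median(lunch_spending)

print(f"mean:   ${average_as_mean:.2f}")
print(f"median: ${average_as_median:.2f}")
```

Here the mean is $16 while the median is $8. Seven of the eight people spend $10 or less, so the median is the better description of the typical amount.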

You are correct that we rarely report measures of variability when we report a mean or median. But we are happy to make these available upon request. And more important, we have a standard rule not to state or imply that there are differences between groups or over time unless we have determined that the differences are statistically significant using appropriate tests.
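To make the reader’s point concrete, here is a minimal sketch of two textbook comparisons. It is not a description of the specific tests we run, and it assumes simple random samples, so it ignores the effect of weighting on sampling error. The sample sizes and standard deviations are hypothetical.

```python
import math


def two_proportion_z(p1: float, n1: int, p2: float, n2: int) -> float:
    """z statistic for the difference between two independent proportions,
    using the pooled proportion for the standard error."""
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    return (p1 - p2) / standard_error


def two_mean_z(m1: float, sd1: float, n1: int,
               m2: float, sd2: float, n2: int) -> float:
    """z statistic for the difference between two independent means.
    Unlike the proportion case, it cannot be computed without the
    standard deviations."""
    standard_error = math.sqrt(sd1 ** 2 / n1 + sd2 ** 2 / n2)
    return (m1 - m2) / standard_error


# 65% last month vs. 62% this month; HYPOTHETICAL 1,000 interviews each.
z_share = two_proportion_z(0.65, 1000, 0.62, 1000)

# $9 vs. $8 a day; HYPOTHETICAL standard deviation of $4 and 500
# interviews in each month.
z_lunch = two_mean_z(9.0, 4.0, 500, 8.0, 4.0, 500)

print(f"z for 65% vs. 62%: {z_share:.2f}")
print(f"z for $9 vs. $8:   {z_lunch:.2f}")
```

With these assumed sample sizes, the three-point change in candidate preference falls short of the conventional 1.96 threshold for significance at the 95% level. The lunch comparison depends entirely on the assumed standard deviation, which is exactly the information the question notes is usually missing.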

Scott Keeter, Director of Survey Research, Pew Research Center

Q. How accurate are the statistics derived from Pew Research polls when applied to the population of the United States?

The accuracy of polls can be judged in different ways. One is the degree to which the sample of the public interviewed for the poll is representative of the whole population. For example, does the poll include the proper proportions of older and younger adults, of people of different races, or of men and women? Another standard for accuracy is whether the poll’s questions correctly measure the attitudes or relevant behaviors of the people who are interviewed. For both of these ways of judging accuracy, Pew Research’s polls do very well. We know that key characteristics of our samples conform closely to known population parameters from large government surveys such as the U.S. Census. Similarly, our final polls in major national elections have a very good track record of measuring intended behavior because they accurately predict the election results (we came within one percentage point of predicting the margin of victory for Barack Obama in 2008, and Republican candidates for the U.S. House in 2010).

To improve the accuracy of our polls, we statistically adjust our samples so that they match the population in terms of the most important demographic characteristics such as education and region (see our detailed methodology statement for more about how this is done). This practice, called weighting, is commonly used in survey research.
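A stripped-down illustration of the idea, using a single characteristic: each group’s weight is its share of the population divided by its share of the sample. All numbers below are hypothetical, and actual weighting adjusts for several characteristics at once, so this is a sketch of the principle rather than our procedure.

```python
# HYPOTHETICAL sample of 1,000 interviews, split by education.
sample_counts = {"college graduate": 500, "not a college graduate": 500}

# HYPOTHETICAL population targets for the same groups.
population_shares = {"college graduate": 0.30, "not a college graduate": 0.70}

# HYPOTHETICAL share of each group giving a particular answer.
share_agreeing = {"college graduate": 0.60, "not a college graduate": 0.40}

sample_size = sum(sample_counts.values())

# Weight = population share / sample share.
weights = {
    group: population_shares[group] / (sample_counts[group] / sample_size)
    for group in sample_counts
}

unweighted_estimate = sum(
    sample_counts[g] * share_agreeing[g] for g in sample_counts
) / sample_size

weighted_estimate = sum(
    sample_counts[g] * weights[g] * share_agreeing[g] for g in sample_counts
) / sum(sample_counts[g] * weights[g] for g in sample_counts)

print(f"weights: {weights}")
print(f"unweighted: {unweighted_estimate:.0%}, weighted: {weighted_estimate:.0%}")
```

Because college graduates are overrepresented in this made-up sample, each of their interviews counts for 0.6 and each of the others for 1.4, and the estimate moves from 50% to 46%.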

Scott Keeter, Director of Survey Research, Pew Research Center

Q. How statistically accurate is an online poll in which participants sign on to contribute their opinions? Would it be possible to get statistically accurate opinions about what the average American wants their taxes to go to through an online poll that allows the participant to indicate what percentage of his/her taxes would go for each of a number of purposes?

The accuracy of a poll depends on how it was conducted. Most of Pew Research’s polling is done by telephone. By contrast, most online polls that use participants who volunteer to take part do not have a proven record of accuracy. There are at least two reasons for this. One is that not everyone in the U.S. uses the internet, and those who do not are demographically different from the rest of the public. Another reason is that people who volunteer for polls may be different from other people in ways that could make the poll unrepresentative. At worst, online polls can be seriously biased if people who hold a particular point of view are more motivated to participate than those with a different point of view. A good example of this was seen in 1998 when AOL posted an online poll asking if President Clinton should resign because of his relationship with a White House intern. The online poll found that 52% of the more than 100,000 respondents said he should. Telephone polls conducted at the same time with much smaller but representative samples of the public found far fewer saying the president should resign (21% in a CBS poll, 23% in a Gallup poll, and 36% in an ABC poll). The president’s critics were highly motivated to register their disapproval of his behavior, and this resulted in a biased measurement of public opinion in the AOL online poll.
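The arithmetic of this kind of self-selection bias can be sketched as follows. The numbers are assumptions chosen for illustration: a true share of 25% is roughly in line with the telephone polls cited above, and the relative response rate is a hypothetical multiplier, not an estimate of what actually happened in the AOL poll.

```python
def observed_share(true_share, relative_response_rate):
    """Share holding a view among volunteers, when people who hold that view
    are `relative_response_rate` times as likely to take part as everyone else."""
    holders = true_share * relative_response_rate  # relative turnout of people holding the view
    others = 1 - true_share                        # relative turnout of everyone else
    return holders / (holders + others)

# Hypothetical: 25% of the public holds a view, and those who do are
# 3.25 times as likely to volunteer for the poll.
share = observed_share(0.25, 3.25)
print(f"share among volunteers: {share:.2f}")
```

Under these assumptions a view held by a quarter of the public shows up as a majority among volunteers, and adding more respondents does nothing to correct it.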

The American Association for Public Opinion Research recently released a detailed report on the strengths and weaknesses of online polling. The full text of the report can be found here. Keep in mind that there is nothing inherently wrong with conducting surveys online; we do many of them with special populations such as foreign policy experts and scientists. And some online surveys are done with special panels of respondents who have been recruited randomly and then provided with internet access if they do not have it.

You also ask whether online polling could determine how Americans would like to see tax revenue spent. Theoretically it could. Because of the complexity of the federal budget, any such poll would have inherent limitations, of course. And an online poll that people volunteered for would have the same potential flaws as other online polling.

Scott Keeter, Director of Survey Research, Pew Research Center

Q. How many people would say that they believed in God if they were able to answer with complete anonymity? That is, if they could take a ballot, check off “yes” or “no” in complete privacy and drop it in a box. The point of my question is that most people will say they believe in God in the presence of others when they think that it is the politically correct answer and, more importantly, that they will be judged on their answer. Their declaration of belief often has little to do with their personal convictions and beliefs. Would such a poll put the lie to the broadly held theory that approximately 80% of Americans believe in God?

Your question is about what survey researchers call a “social desirability effect” — the tendency of respondents in a survey to give the answer that they think they “should” give or that will cast themselves in the most favorable light. This is a real issue, one that we often consider in designing our surveys. Like all reputable polling organizations, the Pew Research Center safeguards the identity of the people who take our surveys. Still, you are right that some respondents may wonder whether their answers are completely confidential, or they may want to make a favorable impression on the interviewer asking the questions.

You are also right to think that people might answer certain kinds of questions more honestly if they could do something akin to dropping a secret ballot into a box. Research suggests that social desirability effects are more pronounced in interviewer-administered surveys (such as the telephone surveys we conduct at Pew) than in self-administered surveys in which people can fill out a questionnaire (either on paper or electronically) in complete privacy. For example, research conducted by the Center for Applied Research in the Apostolate (CARA) shows that among Catholics, estimates of church attendance (thought to be a socially desirable behavior) are higher in telephone surveys than in online surveys.

Several Pew Research Center surveys have included questions about belief in God, asking respondents “Do you believe in God or a universal spirit?” In our Religious Landscape Survey, a 2007 telephone survey with more than 35,000 respondents, 92% answered this question affirmatively. We have never asked this question on a self-administered survey of the U.S. population, so we can’t say exactly how results for this question might have been different if respondents had complete privacy and no interaction with an interviewer. However, in 2008, the Associated Press and Yahoo! News sponsored a series of online surveys conducted by Knowledge Networks among a nationally representative panel of Americans. The June 2008 wave of their poll included the same question about belief in God, and came up with very similar results (93% said yes, they believe in God or a universal spirit).

One other point worth noting is that in our Religious Landscape Survey, after asking respondents whether or not they believe in God or a universal spirit, we followed up and asked those who said “yes” an additional question: “How certain are you about this belief? Are you absolutely certain, fairly certain, not too certain, or not at all certain?” Presumably, even if some respondents expressed belief in God because that’s the socially desirable thing to do, there should be more leeway for people to express doubts after affirming their status as believers. But in fact, most people express little doubt about God’s existence, with more than 70% of the public saying they believe in God with absolute certainty.

The bottom line is that yes, you may be right, there could be some social desirability attached to expressing belief in God. But, even so, the evidence is strong that a very large majority of the U.S. public believes in God.

Alan Cooperman, Associate Director, Research and Gregory A. Smith, Senior Researcher, Pew Forum on Religion & Public Life

Q. What kind of response do you get to mail versus phone versus e-mail polling?

Self-administered surveys are making a comeback, constituting a growing percentage of survey research. This trend is driven by at least two changes. One is obvious: the expanding reach of the internet, which is now used by about three-fourths of the general public and an even higher percentage of professionals and other groups we sometimes poll. That, along with growing expertise about how to construct and administer online surveys, has made it possible to conduct many surveys online that formerly were done by telephone or by mail. Over the past few years, the Pew Research Center has conducted online surveys of several interesting populations including foreign policy experts, scientists and journalists. However, with a couple of exceptions we are not conducting surveys of the general public online because there is currently no way to randomly sample adults by e-mail. We can sample and contact people in other ways, and attempt to get them to complete a survey online, but that creates other challenges for political and social surveys like the ones we do.

The second change driving the growth of self-administered surveys is something called “address-based sampling” or ABS. Mail surveys of the public have always drawn samples of addresses from lists, but only in the past few years have high quality address lists developed by the postal service been available to survey researchers. These lists, in conjunction with household data available from a range of lists such as those maintained by business and consumer database companies, now make it possible to construct very precise samples of households. These households can then be contacted by mail or even by telephone if a telephone number can be matched to them from commercial lists. Many survey organizations, including some working for the federal government, are using these address-based samples as an alternative to traditional telephone surveys. ABS provides a way to reach certain kinds of households that are difficult to reach with telephone surveys, such as those whose residents have only a cell phone. Interestingly, the response rates and the quality of data obtained from ABS surveys have been comparable to what’s obtained from telephone surveys, and the costs are similar as well.

By the way, sometimes people ask us why we don’t just conduct polls on our website. One of the answers in our list of Frequently Asked Questions addresses this. Take a look.

Scott Keeter, Director of Survey Research, Pew Research Center

Q. I see polls saying that Obama will win in 2012 over any Republican who runs. But when people are asked about Obama’s plans and programs, that agenda is, for the most part, rejected — as shown in the recent elections. So, are poll questions on the up and up?

Polls about presidential elections taken this far in advance of the actual election are very unreliable predictors of what will happen (see “How Reliable Are the Early Presidential Election Polls?”). For example, Bob Dole led Bill Clinton in a Gallup poll taken in February 1995, and Walter Mondale led Ronald Reagan in a February 1983 Gallup poll. As we know, there was no President Dole, and President Reagan won a huge landslide reelection victory in 1984. But it’s also important to keep in mind that the outcome of presidential elections involving incumbents depends more on the public’s judgment of performance than on approval or disapproval of specific policies. History suggests that if the economy is judged to be improving significantly in the fall of 2012, President Obama is likely to win reelection.

Scott Keeter, Director of Survey Research, Pew Research Center

Q. I’ve seen some newspaper stories about your U.S. Religious Knowledge Survey. It seems that your categories include “Jews” and “atheists/agnostics.” Well, I’m BOTH Jewish and an atheist. Don’t you realize that there are people like me? How would you classify me?

Yes, lots of people have complex religious identities. We can’t account for every possible variation, but we’re able to track a wide variety of combinations — especially the more common ones — because we ask people not only about their primary religious affiliation but also about their specific beliefs and practices. This allows us to gauge, for example, how many people consider themselves Jewish but don’t believe in God; in the U.S. Religious Knowledge Survey, 15% of the Jewish respondents said they are non-believers.

How we would classify you, however, depends on exactly how you described yourself. First, we ask respondents about their religious affiliation. The full question wording is, “What is your present religion, if any? Are you Protestant, Roman Catholic, Mormon, Orthodox, such as Greek or Russian Orthodox, Jewish, Muslim, Buddhist, Hindu, atheist, agnostic, something else, or nothing in particular?” This measure is based wholly on self-identification: If you say that you are Jewish, we classify you as Jewish.

But that’s not the end of the story. For all those who name a particular religion, we follow up with questions about the specific denomination. We also ask Christians whether they consider themselves to be “born-again” or evangelical. We ask everyone — no matter what they’ve said in the religious affiliation question — how important religion is in their lives, how often they attend religious services and whether they believe in God or a universal spirit. For those who say they believe in God, we ask: “How certain are you about this belief? Are you absolutely certain, fairly certain, not too certain, or not at all certain?” In the Religious Knowledge Survey, as in many of our surveys, we also ask respondents about their views of the Bible and of evolution. And we ask about the religious affiliation of the respondent’s spouse or partner, if any.

Taken together, these questions allow people to express a rich diversity of religious identities. Not only, for example, are there Jews who say they do not believe in God, but we know from previous surveys that there are also self-described atheists who say they DO believe in God, agnostics who say they attend worship services every week, and many other combinations. When we report the results of a poll such as the Religious Knowledge Survey, however, we tend to stick to the larger groups and, following good survey research practices, we do not separately analyze and report results for groups that have very small numbers in our sample.

Alan Cooperman, Associate Director, Research, Pew Forum on Religion & Public Life

(Note: How much do you know about religion? Take the religious knowledge quiz to find out.)

Q. What are current global attitudes about climate change?

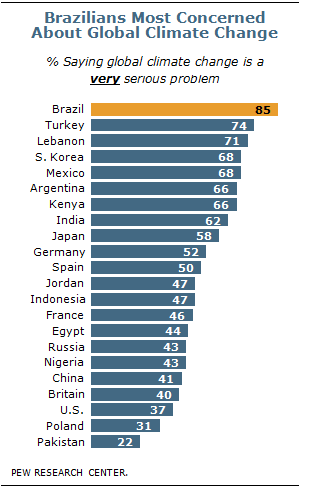

Our international polling shows that publics around the world are concerned about climate change. In the recent spring 2010 Pew Global Attitudes survey, majorities in all 22 nations polled rate global climate change a serious problem, and majorities in ten countries say it is a very serious problem.

There are some interesting differences among the countries included in the survey. Brazilians are the most concerned about this issue: 85% consider it a very serious problem. Worries are less intense, however, in the two countries that emit the most carbon dioxide — only 41% of Chinese and 37% of American respondents characterize climate change as a very serious challenge.

Even though majorities around the globe express at least some concern about this issue, publics are divided on the question of whether individuals should pay more to address climate change. In 11 nations, a majority or plurality agree that people should pay higher prices to cope with this problem, while in 11 nations a majority or plurality say people should not be asked to pay more.

For more on this topic, see Chapter 8 of the June 17, 2010 report by the Pew Global Attitudes Project, “Obama More Popular Abroad Than At Home, Global Image of U.S. Continues to Benefit.”

Richard Wike, Associate Director, Pew Global Attitudes Project

Q. The debates over global warming, abortion, and offshore drilling are habitually buttressed with what percent of the public believes this or that, as if the majority should rule on such issues. Would you consider a poll to determine how many people believe in ghosts, UFOs, astrology, evolution, lucky numbers, black cats, knocking on wood, WMDs in Iraq, Jews control America, Africa is a country, afterlife, reincarnation, etc.? Then after reporting that such and such a percent of Americans believe we should do X, you might add that this is the same percentage of Americans that believe in, say, flying saucers. By no means am I making the case that polls are useless or harmful. I just believe that we should view public opinion for what it is, not a way to find truth or the right answer necessarily.

The Pew Research Center is a non-partisan fact tank; it does not take sides in policy disputes. What our polls provide is a valuable information resource for political leaders, journalists, scholars and citizens. How those political actors choose to use that information is up to them. We just ask that they cite our polls accurately and in context.

We do, in fact, provide such findings as:

— Among the public, one-in-four (25%) believe in astrology (including 23% of Christians); 24% believe in reincarnation; nearly three-in-ten (29%) say they have been in touch with the dead; almost one-in-five (18%) say they have seen or been in the presence of ghosts.

— While 87% of scientists say that humans and other living things have evolved over time and that evolution is the result of natural processes such as natural selection, just 32% of the public accepts this as true.

We also conduct polls that measure how much the public knows about politics and policy. However, we take no position on what, if anything, these opinions and understandings about public affairs signify for public policy.

Jodie T. Allen and Richard C. Auxier, Pew Research Center

Q. In your descriptions about how you conduct your polls you use the term “continental United States.” Does that mean you include Alaska or not? And since you presumably do not include Hawaii and the other Pacific Islands that are part of the U.S. do you make any effort to adjust your results for these omissions? And what about Puerto Rico and the U.S. Virgin Islands? There are, in fact, techniques for adjusting results in such circumstances that are mathematically acceptable but, if used, such adjustments and the methods should be disclosed.

The definition of “continental United States” that we and most other major survey organizations use includes the 48 contiguous states but not Alaska, Hawaii, Puerto Rico and the other U.S. territories. We do not interview in Hawaii and Alaska for practical reasons, including time differences and cost. Strictly speaking, our results are generalizable to the population of adults who live in our definition of the continental United States. (It should be noted, however, that our surveys cover about 98% of the total population in all 50 states, Puerto Rico and the other areas you mention.) To correct any minor skews introduced by sampling, we adjust (“weight”) our sample to match the Census Bureau estimates of the age, gender, race and educational attainment characteristics of the U.S. adult population. We also adjust the sample for other characteristics, such as household size. You can find more information in the detailed descriptions that accompany every major report that we release. Here, for example, is a link to the methods chapter in our Economy Survey conducted in May.

Rich Morin, Senior Editor, Pew Research Center
