The majority of Pew Research Center’s international survey work is conducted through either face-to-face or telephone interviews. As the survey landscape changes internationally, we are exploring the possibility of using self-administered online polls in certain countries, as we do in the United States.

If we move our surveys online, we may need to make other changes so that data from new projects is comparable to data from older ones. For instance, online surveys do not use an interviewer, an important difference from our traditional in-person and phone surveys. Those differences could bias our results. Bridging the gap between interviewing formats raises many questions, so we embedded some experiments into recent surveys to get some answers.

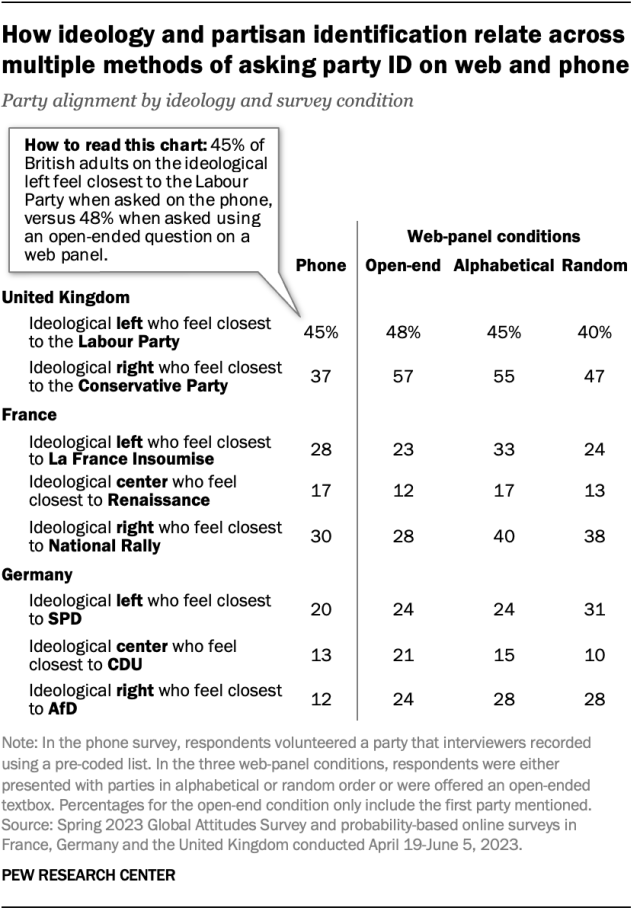

In the sections below, we walk through an experiment where we tested online surveys against phone surveys, drawing on data from France, Germany and the United Kingdom. We compare the three ways we asked about partisanship on the web with a traditional measurement obtained over the phone, concluding with what we learned.


How we’ve asked about partisanship in past telephone surveys

Our annual phone polls ask people in France, Germany and the UK an open-ended question about their partisan affiliation. In the UK, for example, a phone interviewer would ask a respondent, “Which political party do you feel closest to?” then wait for a response and record the answer.

We compared three different online survey methods to see which one would most closely replicate our phone results.

How we asked about partisanship in our new online survey experiments

Open-ended questions

The first method we tested asked respondents about their partisan affiliation in an open-ended format. We gave people a textbox and asked them to enter the name of the party they feel closest to. One benefit of this method is that people can offer relatively new parties or alliances that we have yet to include in our lists and they won’t feel limited by the options listed, even if an “other, please specify” box is included.

But open-ends can be messy. They require a researcher to comb through them, standardizing spelling and making choices, such as whether answers like “the right” or the name of a leader should be counted as a mention of a party. In the UK, a respondent may say Tories, Conservatives, the Conservative Party, Rishi Sunak or other variations on these answers – and researchers have to determine what they meant and code it accordingly. This takes time and, in the case of France and Germany, some foreign language knowledge. Open-ended questions also tend to have higher nonresponse rates than closed-ended questions.
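To make the coding step concrete, here is a minimal sketch of how open-ended answers might be standardized and mapped to party codes. It is not the procedure we used: the lookup table, the function names and the decision to code a leader’s name as a mention of the party are all assumptions made for illustration, and anything the table does not recognize is flagged for a human coder.

```python
import re
import unicodedata

# Hypothetical codebook: normalized variant -> party code.
# The variants are the UK examples mentioned above; a real codebook would be
# much longer, and coding a leader's name as the party is a judgment call.
VARIANTS = {
    "tories": "Conservative",
    "conservatives": "Conservative",
    "conservative party": "Conservative",
    "the conservative party": "Conservative",
    "rishi sunak": "Conservative",
}

def normalize(text: str) -> str:
    """Lowercase, strip accents and punctuation, and collapse whitespace."""
    text = unicodedata.normalize("NFKD", text)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(text.split())

def code_response(raw: str) -> str:
    """Return a party code, 'No answer' for blanks, or 'Needs review'."""
    cleaned = normalize(raw)
    if not cleaned:
        return "No answer"
    return VARIANTS.get(cleaned, "Needs review")

for answer in ["Tories", "  The Conservative Party ", "the right", ""]:
    print(repr(answer), "->", code_response(answer))
```

Ambiguous answers such as “the right” are deliberately left for manual review rather than guessed at, which is where most of the researcher’s time goes.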

Closed-ended questions

We also asked about partisan affiliation using two different closed-ended survey question formats. Each format listed the parties in a different order:

- Alphabetical order: Alphabetical lists present a clear ordering principle for respondents, making it easy to know where to look for their preferred party. But there are some complications with this approach, such as the fact that many parties are known by multiple names or abbreviations. In France, President Emmanuel Macron’s party is currently called Renaissance, but was formerly known as En Marche or LREM – all of which would appear in different places in an alphabetical list. Also, people who feel some allegiance to multiple parties might choose a party that appears earlier in the list, simply because they saw it first. For example, if a Scot were reading the list and saw the Labour Party first, they might select this, despite feeling closer to the Scottish Labour Party.

- Random order: We also tested randomly ordering the parties. While this might make it harder for respondents to find their party in the list, it also might make them more likely to read all response options in detail.
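The two orderings can be sketched in a few lines. This is an illustration only: the party labels are a short example list rather than the list we fielded, and the function names and the per-respondent seeding scheme are assumptions, not a description of our survey software.

```python
import random

# Illustrative labels only, not the full list shown to respondents.
PARTIES = ["Labour Party", "Scottish Labour Party", "Conservative Party"]

def alphabetical_order(parties):
    """Sort party labels case-insensitively, as in the alphabetical format."""
    return sorted(parties, key=str.casefold)

def random_order(parties, respondent_id, seed=0):
    """Shuffle a copy of the labels using a seed derived from the respondent ID.

    Seeding per respondent (an assumption here) makes each person's order
    reproducible, so it can be reconstructed later for analysis.
    """
    rng = random.Random(f"{seed}-{respondent_id}")
    shuffled = list(parties)
    rng.shuffle(shuffled)
    return shuffled

print(alphabetical_order(PARTIES))
print(random_order(PARTIES, respondent_id="r-001"))
```

In the alphabetical version, “Labour Party” always appears above “Scottish Labour Party,” which is the situation described in the example of the Scottish respondent above.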

In the sections below, we compare these three methods with our phone trend question, which we fielded while we were experimenting with the web panels.

What we found

In all three countries, the closed-ended web formats we tested – both the alphabetical list and the randomized list – were more similar to our traditional phone trends than the open-ended question.

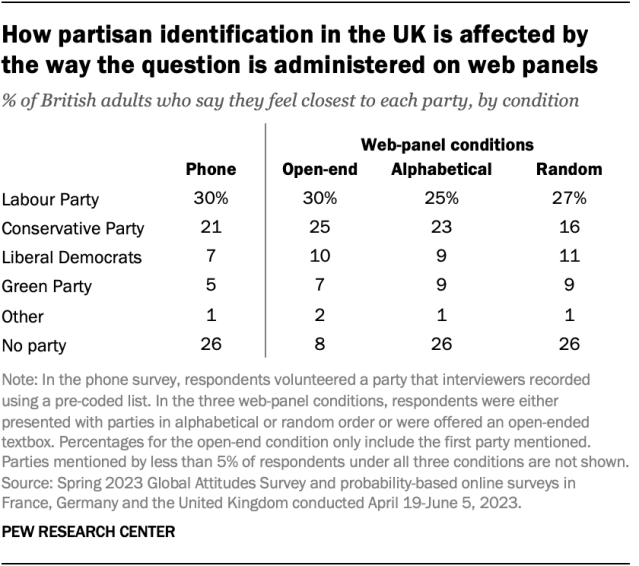
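One simple way to put a number on “more similar” is the mean absolute difference, in percentage points, between a web format’s distribution and the phone distribution. The sketch below is an assumption about how such a comparison could be done, not the method behind the charts, and the figures in it are made-up placeholders rather than survey results.

```python
def mean_abs_diff(web, phone):
    """Mean absolute difference, in percentage points, across categories."""
    categories = set(web) | set(phone)
    if not categories:
        raise ValueError("no response categories to compare")
    total = sum(abs(web.get(c, 0.0) - phone.get(c, 0.0)) for c in categories)
    return total / len(categories)

def closest_format(formats, phone):
    """Name of the web format whose distribution is closest to the phone one."""
    return min(formats, key=lambda name: mean_abs_diff(formats[name], phone))

# Placeholder percentages for illustration only; these are NOT survey results.
phone = {"Party A": 30, "Party B": 45, "No party": 25}
formats = {
    "alphabetical": {"Party A": 29, "Party B": 46, "No party": 25},
    "random": {"Party A": 24, "Party B": 50, "No party": 26},
    "open-ended": {"Party A": 40, "Party B": 52, "No party": 8},
}

for name, shares in formats.items():
    print(name, round(mean_abs_diff(shares, phone), 1))
print("closest:", closest_format(formats, phone))
```

A single summary number hides which categories drive the gap, so it works best alongside a category-by-category comparison like the ones in the country sections that follow.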

United Kingdom

In the UK, both the alphabetical and the randomized closed-ended web formats produced results that were more similar to our traditional phone trends than the open-ended format did. For example, in both the closed-ended formats and on the phone, around a quarter say no party represents them; only 8% say this when given an open-ended box online.

Estimates for many of the largest parties are also quite comparable across question formats – though the share who say the Conservative Party most represents them in the random list (16%) is somewhat lower than in the alphabetical list (23%). The alphabetical results tend to be closer to what we find in our phone polling.

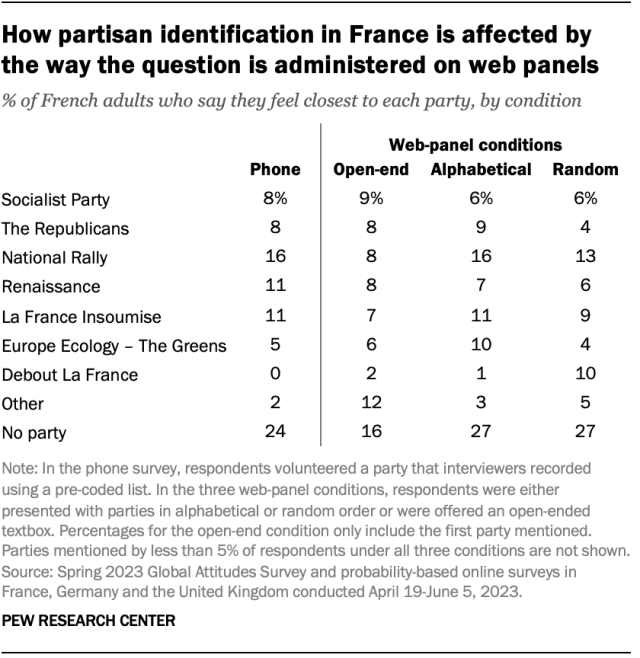

France

In France, too, the closed-ended web formats appear to mirror our traditional phone trends more closely. This is most evident when we look at the share who say no party represents them.

Also, in the open-ended format, the share saying “other” is quite a bit higher than with the other methods. Some of these volunteered answers included the right-wing Reconquête! and NUPES – the name of a left-wing coalition that includes France Insoumise, Europe Ecology, the Socialist Party and the Communist Party. Neither of these was included in the closed-ended lists.

The alphabetical format appears to yield results more similar to traditional phone polling than the randomly ordered list.

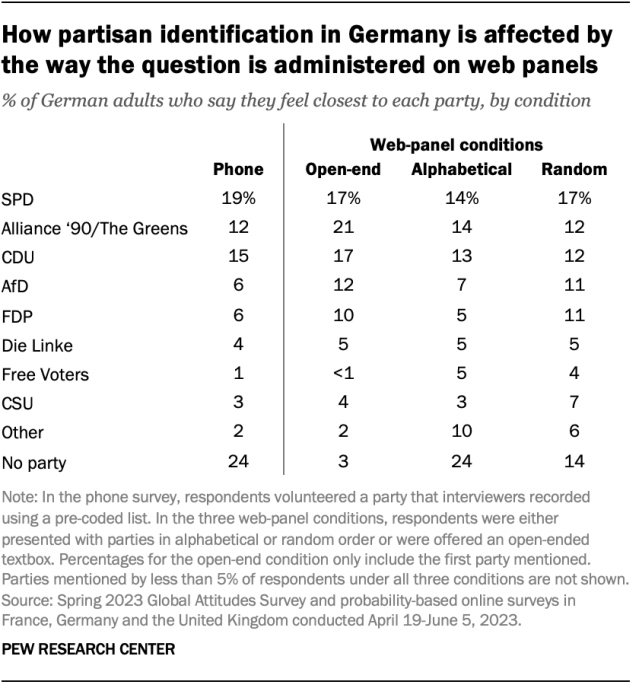

Germany

Findings are similar in Germany. The open-ended response produces a lower share of people who say no party represents them than in the closed-ended web formats or in our traditional phone polling.

The alphabetical list also appears to be most similar to our traditional phone polling.

The connection between ideology and partisanship

We also examined whether ideology and partisan identification are related in similar ways across the various question formats. For example, we know that in our UK phone polls, 45% of Britons on the ideological left describe themselves as Labour Party supporters. Our goal was to find a measure on the web panels that replicates this relationship.

Across the three web question styles, the alphabetical list appears to most closely reproduce the relationship between ideology and partisan identification that we see in the phone survey.

In the UK, identical shares of the left identify as Labour supporters in the alphabetical list and on the phone.

Still, there are some large differences between the web and phone results. This is the case with right-leaning Britons: On the phone, 37% identify with the Conservative Party, while all web versions differ from this by at least 10 percentage points.

The alphabetical list format performs better than the random or open-ended questions, but no method consistently matches the phone results.
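The comparison in this section rests on one statistic: the share of people in an ideological group who name a given party. A minimal sketch of that calculation follows. The record layout, the field names and the optional `weight` field are assumptions for illustration, and the sample records are toy data, not respondents.

```python
def share_identifying(respondents, ideology, party):
    """Weighted percent of an ideology group that names the given party."""
    group = [r for r in respondents if r["ideology"] == ideology]
    total = sum(r.get("weight", 1.0) for r in group)
    if total == 0:
        raise ValueError(f"no respondents with ideology {ideology!r}")
    matched = sum(r.get("weight", 1.0) for r in group if r["party"] == party)
    return 100.0 * matched / total

# Toy records for illustration only; these are NOT survey data.
sample = [
    {"ideology": "left", "party": "Labour"},
    {"ideology": "left", "party": "Labour"},
    {"ideology": "left", "party": "No party"},
    {"ideology": "left", "party": "Other"},
    {"ideology": "right", "party": "Conservative"},
]

print(share_identifying(sample, "left", "Labour"))
```

Running the same function on each web format and on the phone sample gives the figures that are compared here, such as the share of the left identifying with Labour.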

Conclusion: How should we ask about partisanship on online surveys going forward?

These results suggest that an alphabetical list is the best way to replicate our existing phone trends in online surveys.

Still, more experimentation may be warranted. For example, we could potentially address some of the difficulties presented by the open-ended question with a more interactive textbox that could search and present a pre-coded list to respondents. This would leave a fully blank space for text entry only for people who could not find their preferred answer on the existing list.

This method might provide some of the benefits presented by an open-end – such as the ability to mention new political parties or alliances – but without the effort required for the research team to hand code and analyze all of the answers that respondents volunteered.
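The lookup behind such a textbox could be sketched as follows. This is a hypothetical illustration of the idea, not something we have built: the function name, the alias table and the substring-matching rule are all assumptions, and a real survey interface would run comparable logic in the browser as the respondent types.

```python
# Hypothetical pre-coded list: party label -> other names it is known by.
# The French example reflects the renaming described earlier in this post.
PRECODED = {
    "Renaissance": ["En Marche", "LREM"],
    "Conservative Party": ["Tories", "Conservatives"],
    "Labour Party": ["Labour"],
}

def suggest(query, precoded, limit=5):
    """Return pre-coded labels whose name or alias contains the typed text."""
    q = query.strip().casefold()
    if not q:
        return []
    hits = [
        label
        for label, aliases in precoded.items()
        if any(q in name.casefold() for name in (label, *aliases))
    ]
    return hits[:limit]

print(suggest("lrem", PRECODED))
print(suggest("NUPES", PRECODED))  # no match, so fall back to free text entry
```

Matching on aliases addresses the problem of parties known by several names, while an empty result is the signal to offer the blank text field for answers that are not yet on the list.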