(Related posts: An intro to topic models for text analysis, Making sense of topic models, Overcoming the limitations of topic models with a semi-supervised approach, How keyword oversampling can help with text analysis and Are topic models reliable or useful?)

My previous post in this series showed how a semi-supervised topic modeling approach can allow researchers to manually refine topic models to produce topics that are cleaner and more interpretable than those produced by completely unsupervised models. The particular algorithm we used was called CorEx, which provides users with the ability to expand particular topics with “anchor words” that the model may have missed. Using this semi-supervised approach, we were able to train models on a collection of open-ended Pew Research Center survey responses about sources of meaning in life and arrive at a set of topics that seemed to clearly map onto key themes and concepts in our data.

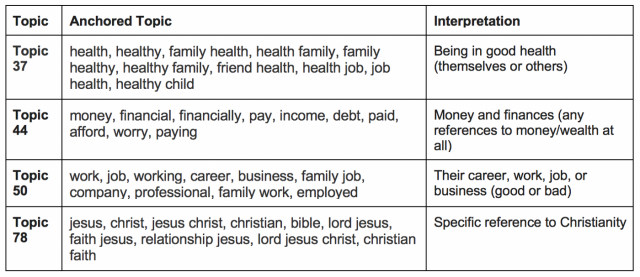

Next, we faced the task of interpreting the topics and assessing whether our interpretations were valid. Here are four topics that seemed coherent after we improved them using our semi-supervised approach:

Interpreting topics from a model can be more difficult than it may initially seem. Understanding the exact meaning of a set of words requires an intimate understanding of how the words are used in your data and the meaning they’re likely intended to convey in context.

Topic 37 above appears to be a classic example of an “overcooked” topic, consisting of little more than the words “health,” “healthy,” and a collection of phrases that contain them. At first, we were unsure whether we’d be able to use this topic to measure a useful concept. While we were hoping to use it to identify survey responses that mentioned the concept of health, we suspected that even our best attempts to brainstorm additional anchor words for the topic might still leave relevant terms missing. Depending on how common these missing terms were in our data, the topic model could seriously understate the number of people who mentioned health-related concepts.

To investigate, we read through a sample of responses and looked for ones that mentioned our desired theme. To our surprise, this “overcooked” topic turned out not to be a problem in the context of our dataset. Of the responses we read (many of which used a wide variety of terms related to health), very few mentioned the theme of health without using a variant of the word itself. In fact, the overwhelming majority of responses that used the term appeared to refer specifically to the theme of being in good health. Responses that mentioned health problems or poor health were far less frequent and typically used more specific terms like “medical condition,” “medication,” or “surgery.”
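The check described above can be expressed as a simple coverage measure: of the responses a human coder judged to mention health, what share also contain a word beginning with “health”? The Python sketch below is a hypothetical illustration, not the Center's code; the regular expression, the function names and the example responses are all invented for this example.

```python
import re

# Hypothetical pattern: matches whole words that *start* with "health"
# ("health", "healthy", "healthier", "healthcare"). Because \b must precede
# "health", a word like "unhealthy" is not matched.
HEALTH_PATTERN = re.compile(r"\bhealth\w*", re.IGNORECASE)


def mentions_health_word(text: str) -> bool:
    """True if the response contains a word beginning with 'health'."""
    return HEALTH_PATTERN.search(text) is not None


def coverage_of_coded_mentions(responses, human_codes):
    """Share of hand-coded health mentions that also use a 'health' word.

    responses:   list of response strings
    human_codes: parallel list of booleans (True = the coder judged that the
                 response mentions the theme of health)
    Returns None when the coder flagged no responses at all.
    """
    positives = [text for text, coded in zip(responses, human_codes) if coded]
    if not positives:
        return None
    caught = sum(mentions_health_word(text) for text in positives)
    return caught / len(positives)


# Invented examples, not actual survey responses.
sample = [
    "Being healthy and spending time with my family",
    "My health",
    "Recovering well after surgery",
    "My dog",
]
codes = [True, True, True, False]
coverage = coverage_of_coded_mentions(sample, codes)  # 2 of 3 coded mentions caught
```

A coverage value close to 1 would suggest that the word-variant topic misses few of the responses a human would count, while a low value would point to the kind of missing anchor terms that could make the model understate how often the theme comes up.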

Based on the specific nature of our documents and the context of the survey prompts we used to collect them, we decided that we could not only use this “overcooked” topic, but we could also assign it a more specific interpretation — “being in good health” — than might otherwise have been possible with different data.

However, this turned out to be an unusual case. For other topics, separating positive mentions from negative ones was often impossible.

For example, the language our survey responses used to describe both financial security and financial difficulties was so diverse and overlapping that we realized we wouldn’t be able to develop separate positive and negative anchor lists and train a model with two separate topics related to finances. Instead, we had to group all of our money-related anchor words together into Topic 44, which we could only interpret as being about money or finances in general. We manually coded a sample of these responses and found that 77% mentioned money in a positive light, compared with 23% that brought it up in a neutral or negative manner. But even our manually refined semi-supervised topic model couldn’t be used to tell the difference.

Clearly, context matters when using unsupervised (or even semi-supervised) methods. Depending on how they’re used, words found in one set of survey responses can mean something entirely different in another set, and interpretations assigned to topics from a model trained on one set of data may not be transferable to another. Since algorithms like topic models don’t understand the context of our documents — including how they were collected and what they mean — it falls on researchers to adjust how we interpret the output based on our own nuanced understanding of the language used.

Between the two semi-supervised CorEx topic models that we trained, we identified 92 potentially interesting and interpretable topics. To test our ability to interpret them, we gave each one a short description, including some additional caveats based on what we knew about the context of each topic’s words in our corpus:

For each topic, we first drew a small exploratory sample consisting of some responses that contained the topic’s top words and others that didn’t. A member of the research team then coded each response based on whether or not it matched the label we had given the topic. After coding all of the samples, we used Cohen’s kappa, a common measure of inter-rater reliability, to test how well the topic models agreed with the descriptions that we had given the topics.
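Cohen’s kappa compares the observed agreement between two sets of codes, here the model’s topic assignments and the researcher’s judgments, with the agreement expected by chance given how often each side says “yes.” The sketch below implements the standard formula from scratch for illustration; in practice, a library routine such as scikit-learn’s `sklearn.metrics.cohen_kappa_score` computes the same statistic. The example codes are invented.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two parallel lists of categorical labels.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed share of
    agreements and p_e is the agreement expected by chance from each
    rater's marginal label frequencies.
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    # Chance agreement: probability both raters pick category k, summed over k.
    p_e = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if p_e == 1:
        # Both raters used one identical category throughout; kappa is undefined.
        return float("nan")
    return (p_o - p_e) / (1 - p_e)


# Invented codes for ten responses: 1 = mentions the topic, 0 = does not.
model_flags = [1, 1, 0, 0, 1, 0, 0, 0, 1, 0]
coder_flags = [1, 1, 0, 0, 0, 0, 0, 1, 1, 0]
kappa = cohens_kappa(model_flags, coder_flags)  # raw agreement 0.8, kappa 7/12
```

In this toy example the two sets of codes agree on 80% of responses, but because 52% agreement would be expected by chance alone, kappa is only about 0.58. Correcting for chance matters most for rare topics, where two raters can agree on most responses simply by both saying “no.”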

Some topics resulted in particularly poor agreement between the model and our own interpretation, often because the words in the topic were used in so many different contexts that its definition would have to be expanded to the point that it would no longer be meaningful or useful for analysis.

For example, one of the topics we had to abandon dealt with opinions about politics, society and the state of the world. Some of the words in this topic were straightforward: “politics,” “government,” “news,” “media,” etc. While there were a handful of false positives for these words (responses in which someone wrote about their career in government, or about recently receiving good news), the vast majority of responses that mentioned these words contained opinions about the state of the world, in line with our interpretation. But a single word, “world,” itself wound up posing a critical problem that forced us to give up on refining this topic.

In our particular dataset, many respondents opined about the state of the world using only general references to “the world,” but many others used “world” in a non-political, personal context, such as describing how they wanted to “make the world a better place” or “travel the world.” As a result, including “world” as an anchor term for this topic produced numerous false positives, but excluding it produced many false negatives. Either way, our topic model would overstate or understate the topic’s prevalence to an unacceptable degree.
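The trade-off with “world” can be illustrated by treating an anchor list as a plain keyword rule and counting errors against human codes. This is a deliberate simplification: CorEx uses anchor words to nudge a probabilistic model rather than as hard keyword rules, so the sketch only shows the direction of the problem. The function names, anchor lists and responses below are hypothetical.

```python
import re


def keyword_flag(text, anchors):
    """True if the response contains any anchor term as a whole word or phrase."""
    lowered = text.lower()
    return any(re.search(r"\b" + re.escape(term) + r"\b", lowered) for term in anchors)


def error_counts(responses, human_codes, anchors):
    """Count (false positives, false negatives) of keyword flags vs. human codes."""
    false_pos = false_neg = 0
    for text, coded in zip(responses, human_codes):
        flagged = keyword_flag(text, anchors)
        if flagged and not coded:
            false_pos += 1
        elif coded and not flagged:
            false_neg += 1
    return false_pos, false_neg


# Invented responses; True = a coder judged it an opinion on the state of the world.
responses = [
    "The government is a mess",
    "I worry about the state of the world",
    "I want to travel the world",
    "Trying to make the world a better place",
    "My career in government",
]
human_codes = [True, True, False, False, False]

base_anchors = ["politics", "government", "news", "media"]
without_world = error_counts(responses, human_codes, base_anchors)
with_world = error_counts(responses, human_codes, base_anchors + ["world"])
```

Without “world,” the rule misses the general complaint about the state of the world (one false negative, plus the government-career false positive); adding “world” removes that false negative but also flags both personal uses of the word, so false positives rise from one to three.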

In this case, we were able to narrow our list of anchor terms to focus on the more specific concept of politics, but other topics presented similar challenges, and we were forced to set some of them aside. As we continued reviewing our exploratory samples, we also noticed that some topics that initially seemed interesting, like “spending time doing something” and “thinking about the future,” turned out to be too abstract to be analytically useful, so we set these aside, too.

From our initial 92 topics, we were left with 31 that seemed analytically interesting, could be given a clear and specific definition, and had encouraging levels of initial reliability, at least based on our non-random exploratory samples. Drawing on the insights we’d gained from viewing these topics in context, we refined them further and added or removed words from our anchor lists where it seemed useful.

For our final selection of topics, we drew new random samples of 100 documents for each topic, this time to be coded by two different researchers to determine whether our tentative topic labels were defined coherently enough to be understood and replicated by humans.
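A minimal sketch of this validation setup, assuming simple random sampling without replacement from the full list of response IDs, with a separate draw for each topic and every sampled response coded independently by both researchers. The function names, coder labels, topic names and seed are hypothetical.

```python
import random


def draw_validation_samples(doc_ids, topic_names, n=100, seed=0):
    """Draw an independent simple random sample of n documents per topic.

    doc_ids must be a sequence (e.g. a list). A fixed seed makes the draw
    reproducible. Returns a dict mapping topic name -> list of sampled IDs.
    """
    rng = random.Random(seed)
    return {topic: rng.sample(doc_ids, n) for topic in topic_names}


def coding_sheet(samples, coders=("coder_1", "coder_2")):
    """One row per (topic, document, coder): every document is coded by both coders."""
    return [
        (topic, doc_id, coder)
        for topic, ids in samples.items()
        for doc_id in ids
        for coder in coders
    ]


# Hypothetical corpus of 1,000 response IDs and three illustrative topic labels.
doc_ids = list(range(1000))
topics = ["good health", "money", "politics"]
samples = draw_validation_samples(doc_ids, topics, n=100, seed=42)
sheet = coding_sheet(samples)  # 3 topics x 100 documents x 2 coders = 600 rows
```

Keeping the two coders’ judgments in separate rows makes it straightforward to compute inter-rater reliability per topic afterward, since each topic yields two parallel lists of codes for the same 100 documents.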

Unfortunately, we found that seven of these 31 topics resulted in unacceptable inter-rater reliability. Though they had seemed clear on paper, our labels turned out to be too vague or confusing, and we couldn’t consistently agree on which responses mentioned the topics and which did not. Fortunately, we had acceptable rates of agreement on the remaining 24 topics, but for a few of the rarer ones, that wasn’t good enough. In an upcoming post, I’ll explain how we used a method called keyword oversampling to salvage these topics.