Polls can draw a lot of attention, particularly when they address high-profile issues like the president’s standing with the U.S. public or the way Americans feel about guns. But many people who read about polls may not be very familiar — or familiar at all — with the way they’re done. That can bring unique challenges for journalists and other writers (like me) who are tasked with conveying poll results in a way that’s understandable and accurate.

Fortunately, there are some simple guidelines writers can follow to minimize the risk of readers misunderstanding or misinterpreting poll findings. My colleagues at Pew Research Center shared some of these tips with me when I first joined the world of survey research, and I’ve since learned a few others that are worth passing along. In this post, I’ll walk through five pointers I’ve found especially helpful.

This is by no means an exhaustive list. If you have your own tips, feel free to share them in the comments section below.

Always be clear about who was surveyed.

Large polling organizations like Pew Research Center and Gallup conduct surveys of many different populations, so it’s important to be as clear as possible in your writing about who, exactly, was polled.

If you’re writing about a political survey fielded in the United States during an election year, did it focus on the opinions of all U.S. adults, only those of registered voters, or “likely” voters? These are overlapping, but not identical, groups of people. President Donald Trump’s approval rating, for example, has sometimes been higher in polls of likely voters than in polls of all U.S. adults. Bearing this in mind, it’s important to identify the specific group you’re writing about quickly and clearly — a practice researchers sometimes call “defining the universe” — and reiterate that universe throughout your writing.

This approach isn’t just important when writing about political questions. It applies in many other contexts. In 2016, for example, Pew Research Center surveyed nearly 8,000 U.S. police officers working in departments of 100 officers or more, asking them questions about many aspects of their professional lives. The survey provided an important look at the views of these officers at a time when police work was under a national microscope. But because the survey focused on officers in comparatively large departments, it did not provide information on the views of all U.S. police officers, including those in small, rural departments. It’s best to make a survey’s parameters clear right away.

Also bear in mind that the universe you’re writing about can change within the same survey. In an early 2019 survey, we found that 69% of one universe (U.S. adults) use Facebook, and that 51% of another universe (Facebook-using U.S. adults) visit the platform several times a day. Be careful not to mix and match universes.
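A little arithmetic shows why the two universes can't be swapped. The sketch below converts the 51% figure, which is a share of Facebook users, into an approximate share of all U.S. adults. The function name `rebase_share` is hypothetical and used only for illustration. The sketch assumes both estimates come from the same survey and ignores rounding in the published percentages, so the result is a rough approximation, not a published finding.

```python
def rebase_share(share_of_subgroup: float, subgroup_share_of_total: float) -> float:
    """Convert a share of a subgroup into an approximate share of the full population.

    Both arguments are proportions between 0 and 1. Hypothetical helper for illustration.
    """
    return share_of_subgroup * subgroup_share_of_total


# Figures from the early 2019 survey described above.
facebook_users = 0.69        # share of U.S. adults who use Facebook
several_times_daily = 0.51   # share of Facebook-using adults who visit several times a day

# Approximate share of ALL U.S. adults who visit Facebook several times a day.
share_of_all_adults = rebase_share(several_times_daily, facebook_users)
print(f"Roughly {share_of_all_adults:.0%} of all U.S. adults")
```

The result, roughly 35%, describes a different universe than the 51% figure. Writing that "51% of Americans visit Facebook several times a day" would overstate the finding considerably.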

Let your readers know when the survey was conducted.

This is standard practice when writing about polls for the simple reason that public opinion can and does change. To use a recent example, survey findings about how frequently Americans smoke e-cigarettes, or “vape,” might look different today than they did a year ago, given the outbreak of a vaping-related illness that has drawn national attention.

It’s not necessary to repeat a survey’s field dates throughout your writing, as you should with the population surveyed (“all U.S. adults,” “registered voters,” etc.). But a good rule of thumb is to mention the field dates near the top of your analysis. And if you’re surfacing poll findings from a long time ago — or ones you suspect may have changed in important ways — it’s safer to use the past tense than the present tense to describe them: “About one-in-ten Americans said they regularly or occasionally smoke e-cigarettes,” rather than “say.”

Stay faithful to the way survey questions were worded.

Writers, myself included, tend to see repetitive language as dull language. Since we don’t want to bore readers, we like to vary the way sentences are phrased and structured. But this inclination can lead to problems when writing about survey questions.

Small differences in question wording can bring out big differences in the way respondents answer. That means writers should be careful when paraphrasing questions (and answers). Straying too far from the question wording can have the effect of inadvertently putting words in respondents’ mouths.

Consider a 2016 survey in which we asked Americans about a contentious issue in the news: whether businesses that provide wedding services “should be required to provide those services to same-sex couples just as they would to all other customers,” or whether such businesses “should be able to refuse to provide those services to same-sex couples if the business owner has religious objections to homosexuality.”

Both sides of the question include important nuance that might well influence Americans’ opinions on the matter. It would not be a fair characterization of the question, for example, to write that 48% of U.S. adults “say businesses should be able to refuse services to same-sex couples” without including the important context about the hypothetical business owner’s “religious objections to homosexuality.” Another important piece of context: The question asked specifically about businesses that provide wedding services, not just any businesses.

Even though it might make your sentences longer and more repetitive, the safest way to ensure you’re summarizing a poll fairly is to hew closely to the question wording — especially on first or second reference, or in cases where your words might otherwise be taken out of context (like in a tweet). If you do venture into paraphrasing in later references, do it thoughtfully.

Pay attention to the margins of error.

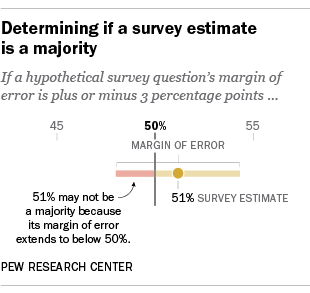

Readers may have a broad understanding of the term “margin of error,” but they may not understand how it applies to specific survey results. Here’s an example: While most people would probably consider 51% a “majority,” it usually isn’t one in the context of a survey finding. That’s because the margin of error means the true figure might be below the 50% threshold, as the chart above shows.

In Pew Research Center publications based on survey data, you’ll almost never see 51% characterized as a “majority.” Instead, we’ll characterize it as “about half,” or something similar. (In fact, we usually won’t refer to percentages like 52% or 53% as a “majority,” either.) We do this to prevent readers from walking away with an incorrect understanding of what is and isn’t a “majority” view.
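To make the reasoning concrete, here is a simplified sketch of the check. It uses the textbook margin of error for a proportion at a 95% confidence level and asks whether the low end of the range clears 50%. The sample size of 1,000 is hypothetical, and the default `design_effect` of 1.0 assumes a simple random sample. Real weighted surveys usually have a design effect above 1, which widens the margin further. This is an illustration, not Pew Research Center’s exact procedure.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96, design_effect: float = 1.0) -> float:
    """Approximate margin of error for a proportion p estimated from n respondents.

    z = 1.96 corresponds to a 95% confidence level. A design_effect above 1.0
    widens the margin to account for weighting; 1.0 assumes a simple random sample.
    """
    return z * math.sqrt(design_effect * p * (1 - p) / n)


p = 0.51   # the survey estimate
n = 1_000  # hypothetical sample size, for illustration only

moe = margin_of_error(p, n)
low, high = p - moe, p + moe
print(f"{p:.0%} +/- {moe:.1%}: plausible range {low:.1%} to {high:.1%}")

# Only call a result a "majority" if even the low end of the range is above 50%.
print("Clearly a majority?", low > 0.5)
```

With these assumptions, the margin is about 3 percentage points, so the plausible range runs from roughly 48% to 54%. Because that range straddles 50%, “about half” is the safer description.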

The same principle applies when comparing survey findings between different subgroups of people. In a fall 2018 survey, 27% of Hispanic U.S. adults — compared with 25% of black adults — said election rules in their state are not fair. But this 2-point difference is not statistically significant after taking the margin of error into account. Since that’s the case, writers should just say the views of Hispanic and black adults on this question are similar or about the same.

The same goes for changes over time. In a recent survey, 65% of U.S. adults said they had read a print book in the past year, compared with 67% who said this in 2018. This slight change is not statistically significant, so it would be inaccurate to describe it as a “decline” in book reading.
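Both comparisons above come down to the same question: Is the gap between two percentages larger than its margin of error? The sketch below applies a simple test for the difference between two independent proportions at the 95% confidence level. The function name is hypothetical, and the sample sizes are invented for illustration, since the real subgroup sizes aren’t given here. The sketch also ignores weighting; accounting for it would only make the margin wider and these 2-point gaps even less likely to be significant.

```python
import math


def difference_is_significant(p1: float, n1: int, p2: float, n2: int, z: float = 1.96) -> bool:
    """Return True if the gap between two independent proportions exceeds its 95% margin of error.

    Assumes two independent simple random samples (a hypothetical simplification).
    """
    se_diff = math.sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    return abs(p1 - p2) > z * se_diff


# Sample sizes below are hypothetical, chosen only for illustration.
subgroups = difference_is_significant(0.27, 1_000, 0.25, 1_000)  # Hispanic vs. black adults
over_time = difference_is_significant(0.65, 1_500, 0.67, 1_500)  # print book reading, 2018 vs. recent survey

print("Subgroup gap significant?", subgroups)
print("Change over time significant?", over_time)
```

Under these assumptions, the margin on each 2-point gap is well over 3 points, so neither difference is significant. Smaller subgroups would produce even wider margins.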

It’s good to provide context, but it’s dangerous to ascribe causality.

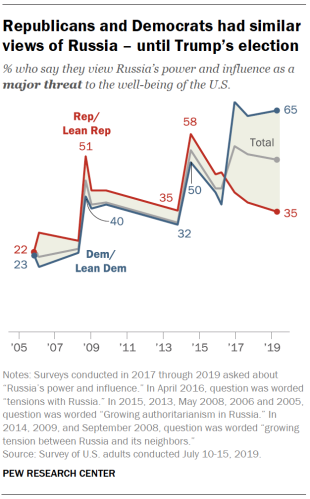

For many years, Pew Research Center has been asking Americans whether they perceive Russia to be a “major threat” to the well-being of the United States. And in most of those surveys, Republicans and Democrats didn’t have fundamentally different opinions. That changed dramatically after Trump’s election in 2016, when Democrats became far more likely to see Russia as a major threat and Republicans became less likely to hold this view, as the chart above shows.

What caused such a dramatic change? Someone who follows the news might reasonably infer that the sudden gulf between Democrats and Republicans had something to do with partisan differences over Russia’s role in the 2016 presidential election. But there’s no way to know what the respondents had in mind when they answered the question.

In a situation like this, it can be helpful to provide some of the underlying news context that might explain the survey findings (by noting, for example, that there have been high-profile allegations and investigations about Russia’s role in the 2016 election). But it’s important to do this without claiming a causal relationship, since you can’t be sure that one exists.

Conclusion

All of these tips fall under the umbrella of one bigger piece of advice: Writers should be clear with readers about what a particular poll can and can’t tell them. The results of any poll depend on basic factors including who was surveyed, when the survey was conducted and what specific questions were asked, in addition to other, more technical factors associated with the survey’s design and methodology. Writers probably can’t prevent every possible misunderstanding about how polls work, but we can take some easy steps to avoid the most common sources of confusion.