Pew Research Center conducts surveys all around the world on a variety of topics, including politics, science and gender. The source questionnaires for these surveys are developed in English and then translated into target languages. A 2014 survey about religion in Latin America, for instance, was translated into Spanish, Portuguese and Guarani.

Other projects require more translations. For example, between 2019 and 2020, we fielded a large national survey in India on religion and national identity, as well as gender roles, with interviews conducted in 17 languages. (See below for a short description of how we identify our target languages for translation.)

In this post, we’ll explain current best practices in survey questionnaire translation, based on academic literature, and discuss how the Center has applied these approaches to our international work.

Best practices in questionnaire translation

Best practices for translating multilingual surveys have evolved considerably over time. An approach called “back translation” was once the standard quality control procedure in survey research, but the “team approach,” also known as the “committee approach,” is now more widely accepted.

Back translation involves the following process:

- A translator translates the source language questionnaire into the target language.

- A different translator translates the target language questionnaire back into the source language (hence the term “back translation”).

- Researchers check how well the back translation aligns with the source language questionnaire.

- Researchers use the comparison between the two documents to draw conclusions about potential errors in the target language translation that need to be remedied.

The back translation technique is meant to assure researchers that translations into a target language are asking the same questions as the original questionnaire. Back translations can identify mistranslations and be time- and resource-efficient. Researchers often use back translations to make a list of potential issues for translators to investigate.

Yet research has shown that this technique detects only some translation flaws. For example, a poor initial translation (one that uses awkward sentence structure and literal language) could be accompanied by a good back translation into the source language. The back translation might smooth out the poor translation choices made by the initial translator, making it unlikely that the poor initial translation would be detected.

On the flip side, a poor back translation may produce false alarms about the initial translation, adding time and cost to the process. Back translation is further faulted for not identifying how translators should fix problems.
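The comparison step in back translation can be sketched in a few lines of Python. This is only an illustration, not a tool the Center describes using: the function name `flag_for_review`, the 0.8 threshold and the back-translated text for Q2 are all hypothetical, and character-level similarity from the standard library's `difflib` is a crude stand-in for a researcher's judgment about meaning.

```python
from difflib import SequenceMatcher


def flag_for_review(source_items, back_translated_items, threshold=0.8):
    """Return (question_id, similarity) pairs whose back translation
    diverges from the source wording.

    The threshold is an arbitrary assumption chosen for this example.
    """
    flagged = []
    for question_id, source_text in source_items.items():
        back_text = back_translated_items.get(question_id, "")
        similarity = SequenceMatcher(
            None, source_text.lower(), back_text.lower()
        ).ratio()
        if similarity < threshold:
            flagged.append((question_id, round(similarity, 2)))
    return flagged


# Source wording, loosely based on the examples in this post.
source = {
    "Q1": "Do your children ever read scripture?",
    "Q2": "Which statement is closer to your opinion?",
}

# Hypothetical back translations: Q1 comes back unchanged, Q2 comes back
# with a literal "physical proximity" reading.
back = {
    "Q1": "Do your children ever read scripture?",
    "Q2": "Which statement is physically nearer to you?",
}

for question_id, similarity in flag_for_review(source, back):
    print(f"{question_id}: similarity {similarity}, send to translators")
```

Note that a check like this sees only the back translation, never the target-language text itself. A poor translation that happens to come back as fluent source-language text would pass unflagged, which is the limitation described above.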

Today, many researchers prefer the team approach to survey questionnaire translation. The team approach generally requires translators, reviewers and adjudicators who bring different kinds of expertise to the translation process, such as knowledge of best practices in translation, native mastery of the target language, research methods expertise and insight into the specific study design and topic. This approach is more reliable at diagnosing multiple kinds of translation issues and at identifying how to fix them. One application of this approach is called the TRAPD method:

- Translation: Two or more native speakers of the target language each produce unique translation drafts.

- Review: The original translators and other bilingual experts in survey research critique and compare the translations, and together they agree on a final version.

- Adjudication: A fluent adjudicator, who understands the research design and the subject matter, signs off on the final translation.

- Pretest: The questionnaire is tested in the target language in a small-scale study to verify or refine the translation.

- Documentation: All translations, edits and commentary are documented to support decision making. Documentation also helps streamline future translation work in the same language by reusing text that has already been translated, reviewed and tested.
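For teams that keep their translation records in code or a database, the five TRAPD stages can be pictured as fields on a single record per question and language. The sketch below is a hypothetical data structure, not a format prescribed by the TRAPD literature or used by the Center. The class name, field names and placeholder strings are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class TranslationRecord:
    """One question in one target language, tracked through TRAPD."""
    question_id: str
    language: str
    drafts: list[str]                      # Translation: independent drafts
    reviewed_text: str = ""                # Review: version the reviewers agree on
    approved: bool = False                 # Adjudication: final sign-off
    pretest_notes: list[str] = field(default_factory=list)  # Pretest feedback
    log: list[str] = field(default_factory=list)            # Documentation

    def review(self, agreed_text, comment):
        self.reviewed_text = agreed_text
        self.log.append(f"review: {comment}")

    def adjudicate(self, adjudicator):
        if not self.reviewed_text:
            raise ValueError("cannot adjudicate before review")
        self.approved = True
        self.log.append(f"adjudication: approved by {adjudicator}")

    def add_pretest_note(self, note):
        self.pretest_notes.append(note)
        self.log.append(f"pretest: {note}")


# Placeholder content only; no real translations are shown here.
record = TranslationRecord("Q1", "Hindi", drafts=["draft A", "draft B"])
record.review("draft B", "draft A used a term specific to one religion")
record.adjudicate("subject matter expert")
record.add_pretest_note("respondents understood the general term")
print(record.log)
```

Keeping the log on the same record as the text is one way to support the reuse described in the Documentation step, since a later project can see both the approved wording and the reasoning behind it.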

Pew Research Center’s team approach for international surveys

Pew Research Center does not have a team of trained linguists on staff, so in each project we collaborate with two external agencies to translate our international questionnaires. The initial translation is done by a local team contracted to conduct the survey in a given country. That translation is later reviewed for accuracy and consistency by a separate verification firm. (The verification firm also reviews issues that arise across languages within the same survey project to make sure we make translation decisions consistently.)

Our team approach, which includes extensive documentation about why individual decisions are made throughout the process, allows the survey and subject matter experts at the Center to serve as adjudicators when disagreements arise between translators and verifiers.

Here’s a closer look at our translation process:

Step 1: Translation assessment

International survey research at Pew Research Center tends to be interviewer-administered — that is, questionnaires are read aloud to respondents either in person or over the phone — as opposed to the self-administered web questionnaires we typically field in the United States. We design our interviewer-administered English questionnaires to be conversational, and we want that to come through in other languages and cultures, too.

A conversational tone, though, can sometimes introduce phrasing that is difficult to translate. In drafting questions, the team pays special attention to American English idioms and colloquialisms that need to be clarified at the outset. In such instances, we provide instructions to translators on how best to convey our meaning. For example, we asked people in India which of two statements was “closer to” their opinion. Since we did not mean physical proximity, we provided alternative phrases as examples: “most similar to” and “most agrees with.” In this way, we aimed to preempt some translation issues.

Members of the translation teams at both external agencies also do initial reviews of the questionnaire to see if other idioms, complex sentence structures or ambiguous phrasings need to be adjusted in the source questionnaire, or whether translation notes should be provided to the translation team.

Step 2: Translation

The local field agency carefully reviews and translates the full English questionnaire into the local language(s).

Step 3: Verification and discussion

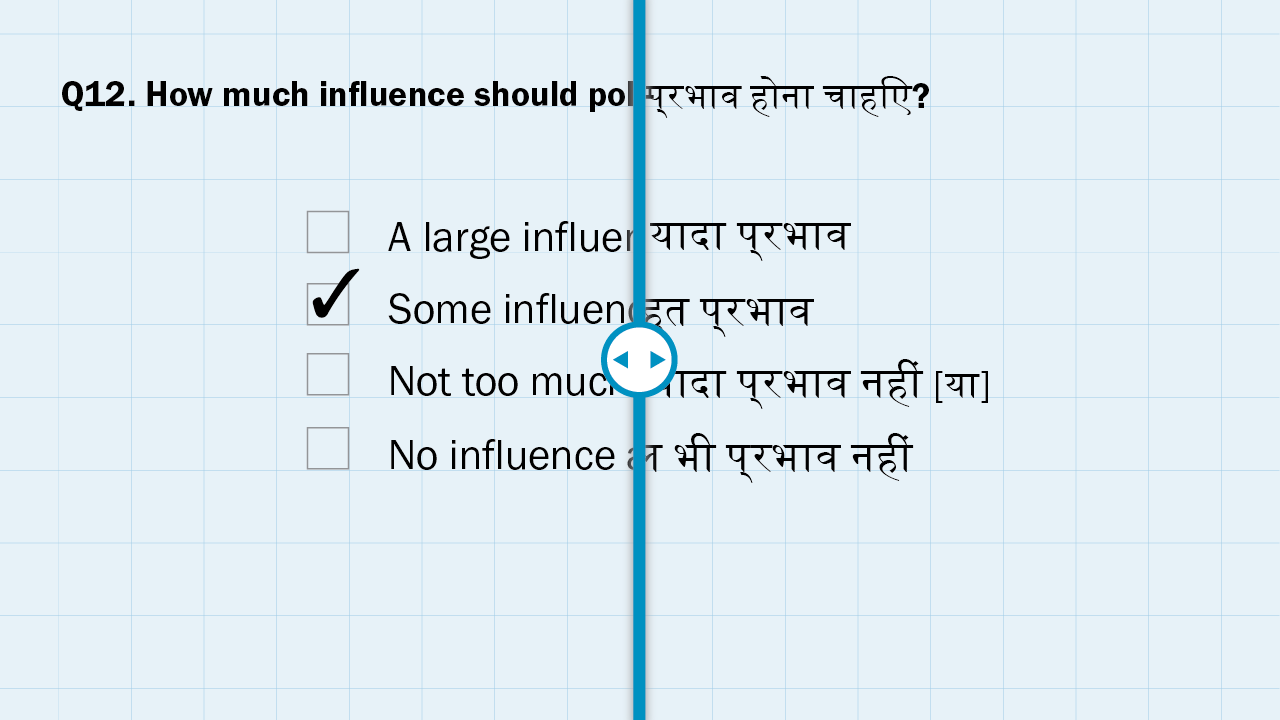

The verifying agency evaluates the questionnaire translation line by line, noting translations with which they agree or disagree. Verifiers leave comments explaining any disagreements and offer alternate translations. The annotated translation is then sent back to the field agency’s translators, who comment on each item and sometimes propose alternate translations.

Many translation issues are easily resolved. For example, a verifier may catch spelling, typographic or grammatical errors in the translation. But in other cases, the verifier may disagree with the translator on how a particular word or phrase should be translated at the level of meaning, and there could be more than one valid way to translate the text, each with its own strengths and weaknesses. In such cases, the translator and verifier typically correspond until consensus is reached. They can also ask Center researchers to clarify the intent of a question or word. This process typically involves at least two rounds of back-and-forth discussion to reach agreement on all final translations.

In our survey of India, for example, we sought to ask the following question: “Do your children ever read scripture?” The question was initially translated into Hindi in a way that specifically referred to Hindu scriptures, even though the question was asked of all respondents, including Muslims and Christians. The verifier suggested a more general term, which improved the accuracy of the translation.

Step 4: Testing translations

The Center tests survey question translations before they are fielded in order to refine our questionnaires. In India, we conducted 100 pretest interviews across six states and union territories — including participants speaking 16 different local languages — to assess and improve how respondents comprehended the words and concepts we used in our questions. This process involved feedback from our interviewer field staff, who assessed how respondents understood the questions and how easy or awkward it was for the interviewers to read the questions aloud. (For more information about our testing procedures for the project in India, see “Developing survey questions on sensitive topics in India.”)

Choosing into which language(s) to translate

The Center generally translates international questionnaires into languages that enhance the national representativeness of our survey sample. We always include the national or dominant language(s) in a country. To determine which, if any, additional languages to use, we look at the share of the population who speak other languages and their geographic distribution. We also consult our local partners about the languages that may be a primary language of an important subgroup of interest, such as an ethnic or religious minority group.
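The selection logic described above can be written as a simple rule of thumb. The sketch below is a simplified illustration under stated assumptions, not the Center's actual procedure: the function name `choose_languages`, the 5% cutoff and the placeholder languages and shares are all hypothetical, and the sketch ignores the geographic distribution of speakers, which the real decision also weighs.

```python
def choose_languages(national, speaker_shares, subgroup_languages, min_share=0.05):
    """Pick translation languages for one country.

    national: national or dominant language(s), always included.
    speaker_shares: other languages mapped to their share of the population.
    subgroup_languages: languages local partners flag for a subgroup of interest.
    min_share: hypothetical cutoff; no fixed threshold is stated in this post.
    """
    selected = list(national)

    # Add other widely spoken languages, largest share first.
    for language, share in sorted(speaker_shares.items(), key=lambda kv: -kv[1]):
        if language not in selected and share >= min_share:
            selected.append(language)

    # Add languages needed for a subgroup of interest, however small.
    for language in subgroup_languages:
        if language not in selected:
            selected.append(language)

    return selected


# Placeholder data for an imaginary country.
languages = choose_languages(
    national=["Language A"],
    speaker_shares={"Language B": 0.12, "Language C": 0.02, "Language D": 0.01},
    subgroup_languages=["Language D"],
)
print(languages)  # ['Language A', 'Language B', 'Language D']
```

In this example, Language D is included despite its small share because it serves a subgroup of interest, while Language C falls below the cutoff and is not flagged by local partners.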

Our 2019-2020 survey of India, for instance, included an oversample in the country’s least-populated Northeast region to ensure we could robustly analyze the attitudes and behaviors of Hindus, Muslims and Christians living there. This oversampling led us to translate our questionnaire into languages spoken only by small segments of the national population. One such language was Mizo, an official language of the state of Mizoram, even though Mizoram accounts for less than 0.1% of the Indian population.